Deploying a Serverless Hugging Face LLM with AWS Lambda

Introduction

This write-up demonstrates how to build a ChatGPT-style conversational application using a pre-trained language model from Hugging Face deployed on AWS. Connecting the model to a React interface lets users submit questions online and receive AI-generated answers immediately.

Summary

- Model Development on AWS SageMaker: A machine learning model is prepared in AWS SageMaker using a Jupyter notebook. After training (or selecting a pre-trained model), it’s deployed as an inference endpoint on SageMaker.

- Serverless Deployment with AWS Lambda: An AWS Lambda function is created to act as a bridge between the user’s request and the SageMaker endpoint. When a query comes in:

  - The Lambda function receives and processes the input.

  - It forwards the query to the SageMaker endpoint.

  - It then returns the model’s response to the user.
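The three Lambda steps above can be sketched as a Python handler. This is a minimal sketch under several assumptions, not the repository's code: the endpoint name comes from a hypothetical `ENDPOINT_NAME` environment variable, the client sends a JSON body with a hypothetical `question` field, and the endpoint is a Hugging Face text-generation style model that accepts `{"inputs": ...}` and returns `[{"generated_text": ...}]`. The `client` parameter exists only so the handler can be exercised locally with a stub; Lambda itself calls `lambda_handler(event, context)`.

```python
import base64
import json
import os

# Hypothetical endpoint name; set ENDPOINT_NAME in the Lambda configuration.
ENDPOINT_NAME = os.environ.get("ENDPOINT_NAME", "hf-llm-endpoint")


def _sagemaker_client():
    # boto3 ships with the AWS Lambda Python runtime; imported lazily so the
    # handler can also be tested locally with a stub client.
    import boto3
    return boto3.client("sagemaker-runtime")


def _response(status, payload):
    # Function URLs accept the same response shape as API Gateway HTTP APIs.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context, client=None):
    # 1. Receive and process the input from the Function URL request body.
    raw = event.get("body") or ""
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(raw).decode("utf-8")
    try:
        question = json.loads(raw).get("question", "").strip()
    except (json.JSONDecodeError, AttributeError):
        question = ""
    if not question:
        return _response(400, {"error": "Body must include a non-empty 'question'."})

    # 2. Forward the query to the SageMaker endpoint.
    client = client or _sagemaker_client()
    result = client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/json",
        Body=json.dumps({"inputs": question}),
    )
    prediction = json.loads(result["Body"].read())

    # 3. Return the model's response (assumes [{"generated_text": "..."}]).
    if isinstance(prediction, list) and prediction:
        answer = prediction[0].get("generated_text", prediction[0])
    else:
        answer = prediction
    return _response(200, {"answer": answer})
```

Validating the input before calling `invoke_endpoint` keeps malformed requests from reaching (and being billed against) the endpoint.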

Permissions and IAM Setup

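The Lambda function's execution role must allow it to call the SageMaker endpoint, specifically the `sagemaker:InvokeEndpoint` action on that endpoint's ARN. Grant only this permission (plus basic CloudWatch Logs access for the function's own logging) so the function can interact with the endpoint securely. The AWS documentation on Lambda execution roles explains how to create and attach these permissions.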
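A least-privilege inline policy for the execution role can be generated as below. This is a sketch: the region, account ID, and endpoint name are hypothetical placeholders, and logging permissions are usually added separately by attaching the AWS managed policy `AWSLambdaBasicExecutionRole`.

```python
import json


def invoke_endpoint_policy(region, account_id, endpoint_name):
    """Build an IAM policy allowing InvokeEndpoint on a single SageMaker endpoint."""
    endpoint_arn = f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    policy = invoke_endpoint_policy("us-east-1", "123456789012", "hf-llm-endpoint")
    print(json.dumps(policy, indent=2))
```

Scoping `Resource` to one endpoint ARN, rather than `*`, means the function cannot invoke any other model in the account.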

Pre-Trained Model Deployment

Instead of training a model from scratch, you can take a pre-trained model from the Hugging Face Hub and deploy it to SageMaker. This shortens development considerably, because the model has already learned general language patterns. For a step-by-step guide, refer to Hugging Face’s AWS integration tutorials.
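A Hub model can be deployed from a SageMaker notebook with the SageMaker Python SDK's `HuggingFaceModel`, which reads the `HF_MODEL_ID` and `HF_TASK` environment variables to pull the model at start-up. This is a sketch, not the repository's notebook: the model ID `google/flan-t5-base`, the task, the instance type, and the version trio are illustrative assumptions, and the versions must match an available Hugging Face Deep Learning Container.

```python
# Hypothetical defaults: a small Hub model and its pipeline task.
MODEL_ID = "google/flan-t5-base"
TASK = "text2text-generation"


def hub_environment(model_id=MODEL_ID, task=TASK):
    """Environment variables the Hugging Face inference container uses
    to download and load a model from the Hub."""
    return {"HF_MODEL_ID": model_id, "HF_TASK": task}


def deploy_pretrained(role_arn, instance_type="ml.m5.xlarge"):
    # Requires the `sagemaker` SDK (preinstalled in SageMaker notebooks).
    from sagemaker.huggingface import HuggingFaceModel

    model = HuggingFaceModel(
        env=hub_environment(),
        role=role_arn,
        # Must correspond to an existing Hugging Face DLC; adjust as needed.
        transformers_version="4.26",
        pytorch_version="1.13",
        py_version="py39",
    )
    # Creates the model, endpoint configuration, and endpoint; returns a Predictor.
    return model.deploy(initial_instance_count=1, instance_type=instance_type)
```

In a notebook, `predictor = deploy_pretrained(sagemaker.get_execution_role())` followed by `predictor.predict({"inputs": "..."})` would test the endpoint, and `predictor.endpoint_name` is the name the Lambda function needs. Calling `predictor.delete_endpoint()` when finished stops the hourly instance charges.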

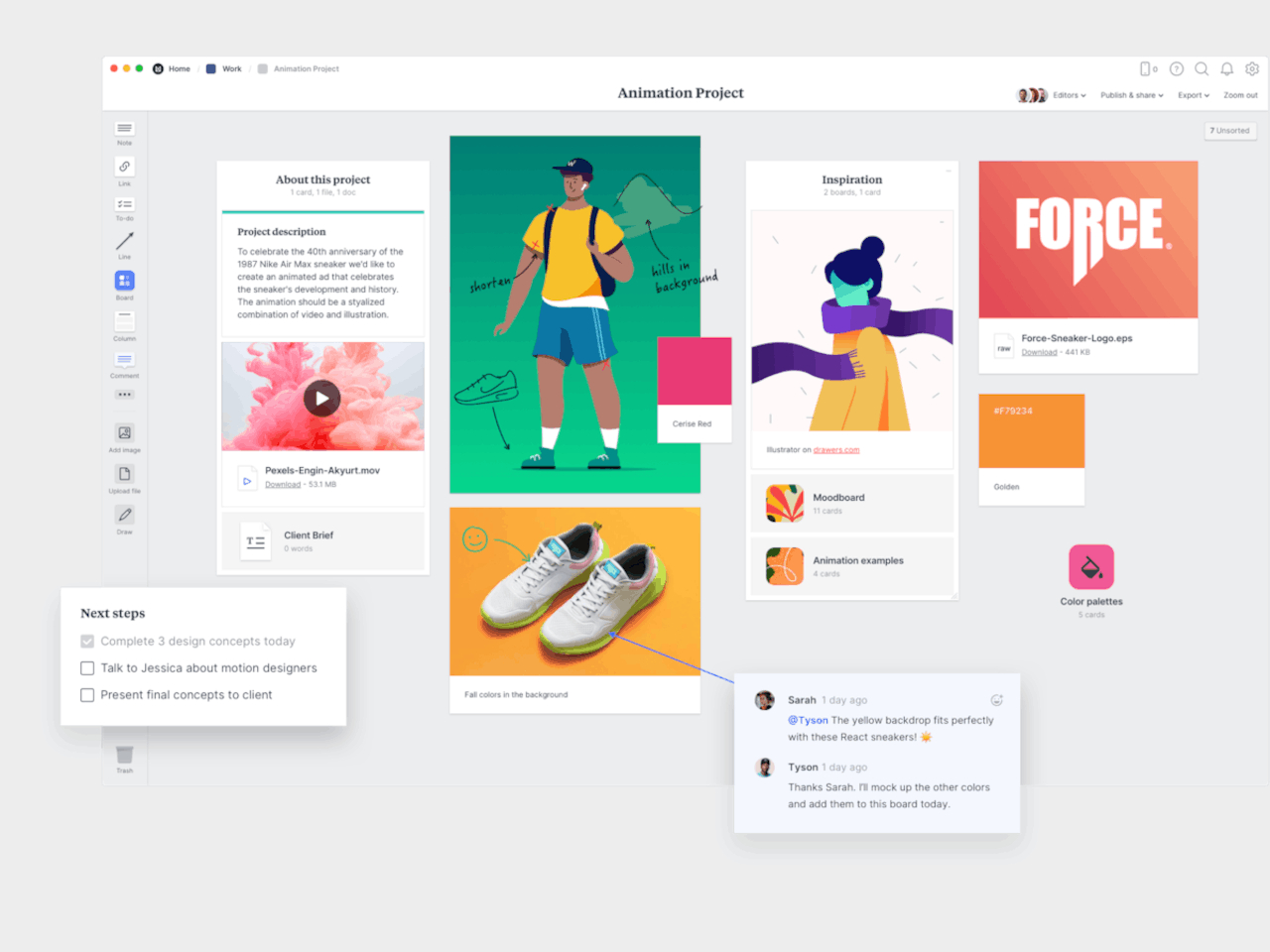

Frontend: React Application

A straightforward React app serves as the user interface:

- Users type in questions or prompts.

- These queries are sent to the Lambda function through its Function URL.

- The Lambda function forwards each query to the SageMaker endpoint and receives the model's response.

- Finally, the React app displays the response for the user.
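The request the frontend sends can be illustrated with Python's standard library; the React app itself would make the same call with `fetch`. This sketch assumes the Lambda function accepts a JSON body with a `question` field and replies with a JSON object containing an `answer` field (both field names are hypothetical), and the Function URL shown is a placeholder.

```python
import json
import urllib.request

# Placeholder Function URL; copy the real one from the Lambda console.
FUNCTION_URL = "https://example.lambda-url.us-east-1.on.aws/"


def build_request(question, url=FUNCTION_URL):
    """Build the POST request the frontend sends to the Lambda Function URL."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, url=FUNCTION_URL, timeout=60):
    """Send the question and return the 'answer' field of the JSON reply."""
    with urllib.request.urlopen(build_request(question, url), timeout=timeout) as resp:
        return json.loads(resp.read())["answer"]
```

Because a browser app served from another origin (such as GitHub Pages) calls the Function URL directly, CORS must be enabled in the Function URL configuration for that origin.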

Deployment

The React app can be published on GitHub Pages for free public access. Alternatively, you can use AWS (such as S3 and CloudFront) if you’d like more customization. Be aware that hosting on AWS may involve some costs.
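For the S3 route, the production build folder (for example `build/` or `dist/`, depending on the toolchain) can be uploaded with boto3. This is a sketch under assumptions: the bucket name is hypothetical, the bucket and any CloudFront distribution are already configured, and the optional `client` argument exists only for local testing. Setting `ContentType` per file matters because S3 otherwise serves objects as `binary/octet-stream`, which browsers will not render as a page.

```python
import mimetypes
from pathlib import Path


def upload_static_site(build_dir, bucket, client=None):
    """Upload every file under build_dir to bucket, keeping relative paths
    as object keys and setting a Content-Type guessed from the file name."""
    if client is None:
        import boto3  # requires AWS credentials configured locally
        client = boto3.client("s3")
    root = Path(build_dir)
    uploaded = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # skip directories; S3 has no real folders
        key = path.relative_to(root).as_posix()
        content_type = mimetypes.guess_type(path.name)[0] or "application/octet-stream"
        client.upload_file(str(path), bucket, key, ExtraArgs={"ContentType": content_type})
        uploaded.append(key)
    return uploaded
```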

Clone the repository: `git clone https://github.com/ife-gsaola/hugging-face-llm.git`
