Sundial: A New Era for Time Series Foundation Models with Generative AI

The post Sundial: A New Era for Time Series Foundation Models with Generative AI appeared first on MarkTechPost.

Time series forecasting presents a fundamental challenge because of its intrinsic non-determinism, which makes future values difficult to predict accurately. Traditional methods generally rely on point forecasting, producing a single deterministic value that cannot describe the range of possible outcomes. Although recent deep learning methods have improved forecasting precision, they require task-specific training and do not generalize well beyond the distributions seen during training. Most models also impose strict parametric assumptions or rely on discrete tokenization, which can cause out-of-vocabulary issues and quantization errors. Overcoming these constraints is key to building scalable, transferable, and generalizable forecasting models that work across domains without extensive retraining.
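
Why a single point forecast can hide the range of outcomes is easy to see with a toy future that is equally likely to go up or down. The sketch below is illustrative only: the sample values are invented, and `mse` is a hypothetical helper, not anything from the Sundial paper.

```python
import statistics

# Hypothetical toy data: the next value is equally likely to land near +10 or
# near -10. These numbers are invented for illustration.
future_samples = [10.0, 9.5, 10.5, -10.0, -9.5, -10.5]

def mse(prediction, outcomes):
    """Mean squared error of a single point prediction against many outcomes."""
    return sum((prediction - y) ** 2 for y in outcomes) / len(outcomes)

# The point forecast that minimizes MSE is the mean of the outcomes.
point_forecast = statistics.mean(future_samples)

# How far that forecast is from the closest outcome that can actually occur.
gap_to_nearest_outcome = min(abs(point_forecast - y) for y in future_samples)

print(f"point forecast:           {point_forecast:.2f}")
print(f"MSE at the mean:          {mse(point_forecast, future_samples):.2f}")
print(f"MSE at +10 (a real mode): {mse(10.0, future_samples):.2f}")
print(f"gap to nearest outcome:   {gap_to_nearest_outcome:.2f}")
print(f"range of outcomes:        [{min(future_samples)}, {max(future_samples)}]")
```

The mean (0.0) has the lowest MSE, yet it sits 9.5 away from every outcome that actually occurs, so the single number says nothing about the two-sided spread. A distributional forecast keeps both possibilities.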

Current forecasting models fall roughly into two categories: statistical models and deep learning-based models. Statistical models, such as ARIMA and Exponential Smoothing, are interpretable but struggle to capture the complex dependencies in large datasets. Transformer-based deep learning models show impressive predictive ability; however, they often transfer poorly to new domains, require extensive in-distribution training, and, in some time series foundation models, depend on discrete tokenization. Discrete tokenization maps time series values into categorical token sequences, which discards fine-grained information, makes representation learning rigid, and can introduce quantization errors. Other foundation models, such as TimesFM, Timer, and Moirai, embed continuous patches but are trained either with point-wise objectives such as MSE or with fixed parametric output distributions. More broadly, most forecasting models rely on prior probabilistic assumptions, such as Gaussian distributions, that limit their ability to capture the rich and highly variable nature of real-world data. These constraints prevent existing methods from providing accurate and reliable probabilistic forecasts that adequately reflect uncertainty in decision-making applications.
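
To make the quantization and out-of-vocabulary problems concrete, here is a hypothetical uniform-binning tokenizer. The value range, bin count, and the names `tokenize` and `detokenize` are assumptions for illustration; this is not the tokenizer of any specific model.

```python
# Hypothetical uniform-binning tokenizer: values in [LOW, HIGH] map to NUM_BINS ids.
LOW, HIGH, NUM_BINS = -1.0, 1.0, 8
BIN_WIDTH = (HIGH - LOW) / NUM_BINS

def tokenize(value):
    """Map a real value to a discrete bin id; values outside [LOW, HIGH] are clipped."""
    clipped = min(max(value, LOW), HIGH)
    return min(int((clipped - LOW) / BIN_WIDTH), NUM_BINS - 1)

def detokenize(token):
    """Map a bin id back to the center of its bin."""
    return LOW + (token + 0.5) * BIN_WIDTH

# 3.00 lies outside the range the "vocabulary" was built for.
series = [0.03, 0.10, 0.20, 0.90, 3.00]
tokens = [tokenize(v) for v in series]
reconstructed = [detokenize(t) for t in tokens]
errors = [abs(v - r) for v, r in zip(series, reconstructed)]

for v, t, r, e in zip(series, tokens, reconstructed, errors):
    print(f"value={v:5.2f}  token={t}  decoded={r:6.3f}  error={e:.3f}")
```

Here 0.03, 0.10, and 0.20 all collapse to the same token (fine-grained information is lost), and 3.00 is clipped to the same token as 0.90, producing a large reconstruction error. Continuous patch embeddings avoid both effects because values are never rounded to a finite vocabulary.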

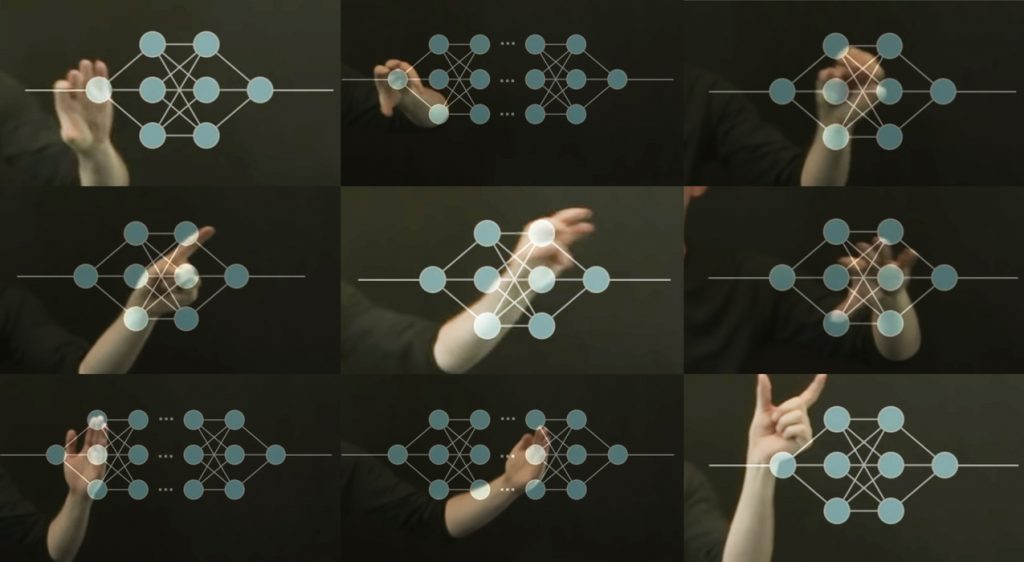

To overcome these challenges, Sundial proposes a generative, scalable, and flexible time series foundation model that learns complex patterns directly from raw data. Instead of discrete tokenization, it uses continuous tokenization with native patching, which preserves the continuity of time series and enables more expressive representation learning. A key innovation behind its forecasting power is TimeFlow Loss, a flow-matching-based generative training objective that lets the model learn predictive distributions without prior probabilistic assumptions. This approach helps avoid mode collapse and lets the model generate multiple plausible future trajectories instead of a single deterministic prediction. In addition, the model is trained on TimeBench, a large-scale dataset of one trillion time points drawn from real-world and synthetic time series, which gives it strong generalization across a wide range of forecasting tasks.
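
The idea behind a flow-matching objective can be sketched on a one-dimensional toy problem. This uses the generic conditional flow-matching loss with a straight noise-to-data path; the exact path, conditioning, and network used by TimeFlow Loss are described in the paper and may differ. The two-mode target and the helper names (`optimal_velocity`, `flow_matching_loss`, `generate`) are hypothetical. Instead of training a network, the sketch plugs in the closed-form velocity that minimizes the loss, which is what a perfectly trained model would approximate.

```python
import math
import random

# Hypothetical toy target: for one fixed context, the future value is either
# -10 or +10 with equal probability. In Sundial the target would be a future
# patch, conditioned on the Transformer's representation of the lookback window.
MODES = [-10.0, 10.0]

def optimal_velocity(x, t):
    """Closed-form minimizer of the flow-matching loss for the toy target.

    Path: x_t = (1 - t) * x0 + t * x1 with noise x0 ~ N(0, 1).
    Best velocity: E[x1 - x0 | x_t = x] = (E[x1 | x_t = x] - x) / (1 - t).
    """
    sigma = 1.0 - t
    # Log-likelihood of x under each mode: x_t | x1 = a  ~  N(t * a, sigma^2).
    logits = [-((x - t * a) ** 2) / (2.0 * sigma ** 2) for a in MODES]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(logit - top) for logit in logits]
    posterior_mean = sum(w * a for w, a in zip(weights, MODES)) / sum(weights)
    return (posterior_mean - x) / sigma

def flow_matching_loss(velocity_fn, num_samples, rng):
    """Monte Carlo estimate of E[(v(x_t, t) - (x1 - x0))^2]."""
    total = 0.0
    for _ in range(num_samples):
        x0 = rng.gauss(0.0, 1.0)       # noise sample
        x1 = rng.choice(MODES)         # data sample (a possible future)
        t = 0.99 * rng.random()        # stay away from t = 1, where 1 - t = 0
        xt = (1.0 - t) * x0 + t * x1   # point on the straight noise-to-data path
        target = x1 - x0               # velocity of that straight path
        total += (velocity_fn(xt, t) - target) ** 2
    return total / num_samples

def generate(velocity_fn, num_steps, rng):
    """Draw one forecast by integrating dx/dt = v(x, t) from t = 0 to 1 (Euler)."""
    x = rng.gauss(0.0, 1.0)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        x += dt * velocity_fn(x, k * dt)
    return x

# Same seed for both, so the two losses see identical (x0, x1, t) draws.
loss_optimal = flow_matching_loss(optimal_velocity, 2000, random.Random(1))
loss_zero = flow_matching_loss(lambda x, t: 0.0, 2000, random.Random(1))

rng = random.Random(0)
forecasts = [generate(optimal_velocity, 50, rng) for _ in range(200)]

print(f"loss, optimal velocity: {loss_optimal:.2f}")
print(f"loss, zero velocity:    {loss_zero:.2f}")
print(f"forecasts below 0: {sum(f < 0 for f in forecasts)}, above 0: {sum(f > 0 for f in forecasts)}")
```

Because each forecast starts from fresh noise, repeated calls to `generate` land on both modes rather than on their average, which is the sense in which a generative objective produces multiple plausible trajectories instead of collapsing to one. The number of Euler steps and the number of samples are natural knobs for trading compute against fidelity.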

Sundial combines several innovations in tokenization, architecture, and training. Its native patching-based continuous tokenization processes time series as continuous-valued patches rather than mapping them to discrete categorical tokens. A re-normalization method improves generalization by handling variability across datasets and distribution shifts. The backbone is a decoder-only Transformer with causal self-attention and rotary position embeddings, which help it model temporal dependencies. Pre-LN stabilizes training, while FlashAttention and KV Cache optimizations improve efficiency, particularly at inference time. TimeFlow Loss enables probabilistic forecasting through flow matching, allowing the model to learn non-parametric distributions without being constrained by fixed distributional assumptions. Rather than a single point estimate, the model produces multiple possible outcomes, which supports decision-making in uncertain environments. Training is conducted on TimeBench, a trillion-scale dataset spanning domains such as finance, weather, IoT, and healthcare, which helps ensure broad applicability and robustness.
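
The input pipeline described above (normalize, split into continuous patches, attend causally) can be sketched in a few lines. This is a minimal illustration under assumptions: plain per-series instance normalization stands in for Sundial's re-normalization, the patch length and left-padding are arbitrary choices, and the helper names (`normalize`, `denormalize`, `patchify`, `causal_mask`) are hypothetical. In the actual model each patch would be embedded into a vector and processed by the decoder-only Transformer.

```python
import statistics

def normalize(context):
    """Instance normalization: scale the lookback window by its own mean and std."""
    mean = statistics.fmean(context)
    std = statistics.pstdev(context) or 1.0  # guard against a constant series
    return [(x - mean) / std for x in context], mean, std

def denormalize(values, mean, std):
    """Map model outputs back to the original scale of the series."""
    return [v * std + mean for v in values]

def patchify(series, patch_len):
    """Split a series into non-overlapping, continuous-valued patches (no binning).

    Left-pads with the first value so the length is a multiple of patch_len
    (the padding strategy is an assumption for this sketch).
    """
    pad = (-len(series)) % patch_len
    padded = [series[0]] * pad + list(series)
    return [padded[i:i + patch_len] for i in range(0, len(padded), patch_len)]

def causal_mask(num_tokens):
    """mask[i][j] is True when patch i may attend to patch j (only j <= i)."""
    return [[j <= i for j in range(num_tokens)] for i in range(num_tokens)]

# Example usage with an invented lookback window.
context = [100.0, 102.0, 101.0, 105.0, 107.0, 106.0, 110.0, 112.0]
normed, mean, std = normalize(context)
patches = patchify(normed, patch_len=4)
mask = causal_mask(len(patches))
restored = denormalize(normed, mean, std)
```

Normalizing each window by its own statistics lets series with very different scales share one model, and denormalizing the outputs returns forecasts in the original units. The causal mask is what makes the Transformer decoder-only: each patch sees only itself and earlier patches.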

Sundial achieves state-of-the-art performance on a variety of zero-shot forecasting benchmarks, demonstrating strong accuracy, efficiency, and scalability. In long-term forecasting, it consistently outperforms previous time series foundation models, with substantial reductions in Mean Squared Error (MSE) and Mean Absolute Error (MAE). In probabilistic forecasting, Sundial ranks among the top-performing models on key metrics such as MASE and CRPS while offering a substantial advantage in inference speed. The model also scales well: larger configurations yield better accuracy, and TimeFlow Loss proves more effective than standard MSE- or diffusion-based objectives. Sundial further offers flexible inference, letting users trade computational cost against forecasting accuracy, which makes it particularly useful for practical applications that need reliable and adaptive time series forecasts.
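
For readers less familiar with the metrics mentioned above, the sketch below uses common textbook definitions of MSE, MAE, MASE, and CRPS. The benchmark's exact protocol (the seasonal period used for MASE, how scores are aggregated across series and horizons) is not given here and may differ. CRPS is estimated from forecast samples, which suits a model that outputs sample trajectories; all inputs are invented examples.

```python
def mse(forecast, actual):
    """Mean squared error over a forecast horizon."""
    return sum((f - a) ** 2 for f, a in zip(forecast, actual)) / len(actual)

def mae(forecast, actual):
    """Mean absolute error over a forecast horizon."""
    return sum(abs(f - a) for f, a in zip(forecast, actual)) / len(actual)

def mase(forecast, actual, history, season=1):
    """MAE scaled by the in-sample MAE of a seasonal naive forecast on `history`."""
    naive_errors = [abs(history[i] - history[i - season]) for i in range(season, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    return mae(forecast, actual) / scale

def crps_from_samples(samples, observed):
    """CRPS of the empirical sample distribution at one time step.

    CRPS = E|X - y| - 0.5 * E|X - X'|, with X, X' drawn from the samples.
    """
    n = len(samples)
    term1 = sum(abs(x - observed) for x in samples) / n
    term2 = sum(abs(x - z) for x in samples for z in samples) / (n * n)
    return term1 - 0.5 * term2

# Example usage with invented numbers.
history = [10.0, 12.0, 11.0, 13.0, 12.0]
forecast, actual = [13.0, 14.0], [12.0, 15.0]
print(mse(forecast, actual), mae(forecast, actual), mase(forecast, actual, history))
print(crps_from_samples([13.0], 12.0))              # single sample: reduces to |error|
print(crps_from_samples([11.0, 12.0, 13.0], 12.0))  # spread of samples around the truth
```

With a single sample, CRPS reduces to the absolute error (1.0 here), while three samples spread around the observation score 2/9, roughly 0.22. This is why CRPS rewards well-calibrated distributional forecasts rather than single point estimates.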

Sundial is a significant advance in time series forecasting: a generative modeling framework that combines continuous tokenization, a Transformer backbone, and a novel probabilistic training objective. With TimeFlow Loss, it surpasses conventional parametric forecasting methods by learning highly flexible, unconstrained predictive distributions. Trained on the trillion-scale TimeBench dataset, it achieves state-of-the-art results on a variety of forecasting tasks with strong zero-shot generalization. Its ability to generate multiple plausible future trajectories, combined with its efficiency, makes it a powerful decision-making tool across many industries and expands what time series foundation models can offer.

Check out the Paper. All credit for this research goes to the researchers of this project.
