How distributed training works in PyTorch: distributed data-parallel and mixed-precision training

Learn how distributed training works in PyTorch: data parallel, distributed data parallel, and automatic mixed precision. Use these techniques to speed up the training of your deep learning models.

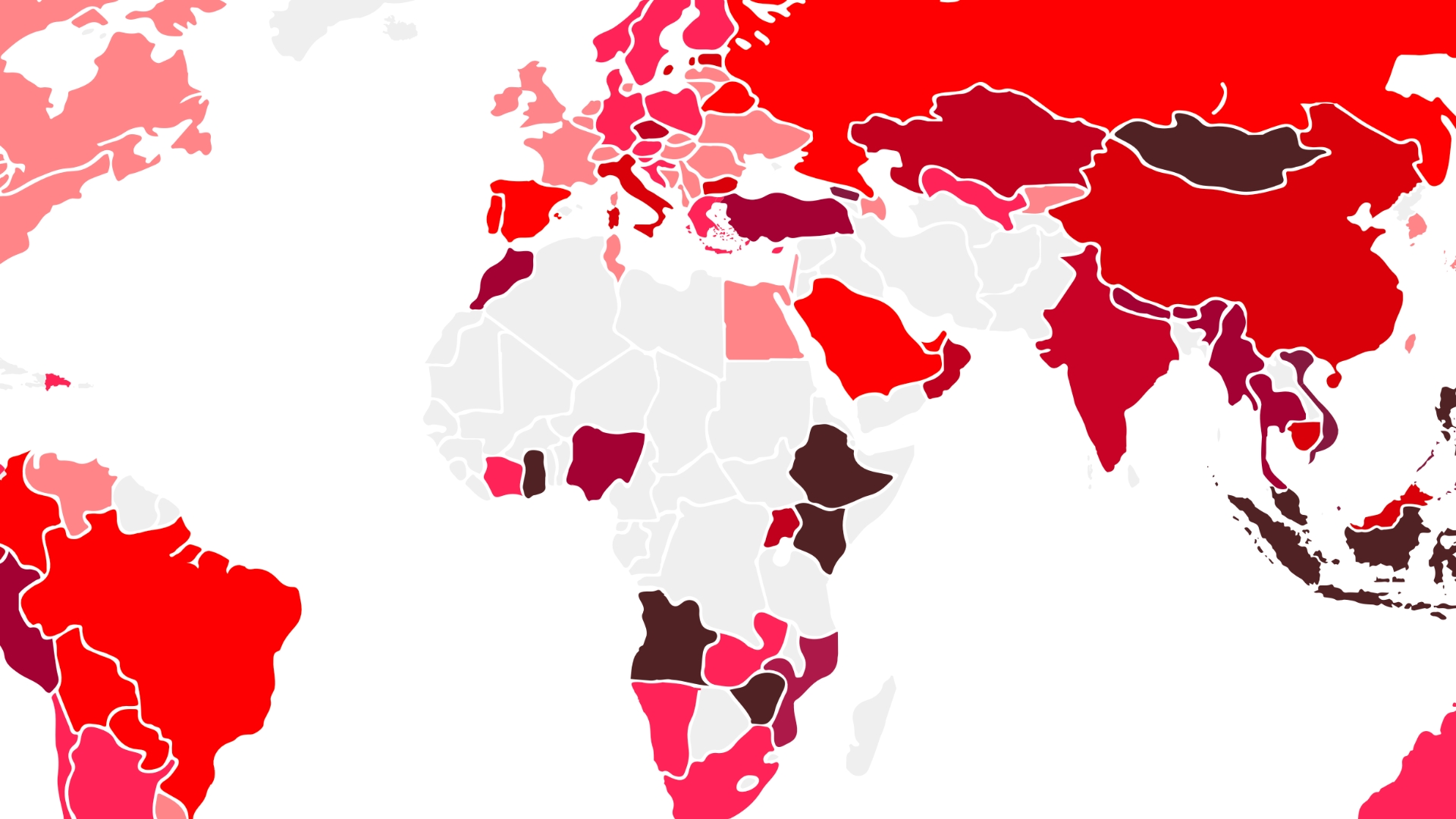
