Navigating the Challenges of Multimodal AI Data Integration

Rapid advances in artificial intelligence (AI) are driving innovation. Multimodal AI is a form of AI that processes multiple data types together, such as video, audio, images, text, and even sensory inputs. In contrast to unimodal systems, which typically work with one type of data (e.g., text-only or image-only), multimodal AI integrates information from various data sources to build a more comprehensive picture of its environment. This improves its ability to analyze complex situations in a human-like way and can make its decisions more accurate and efficient.

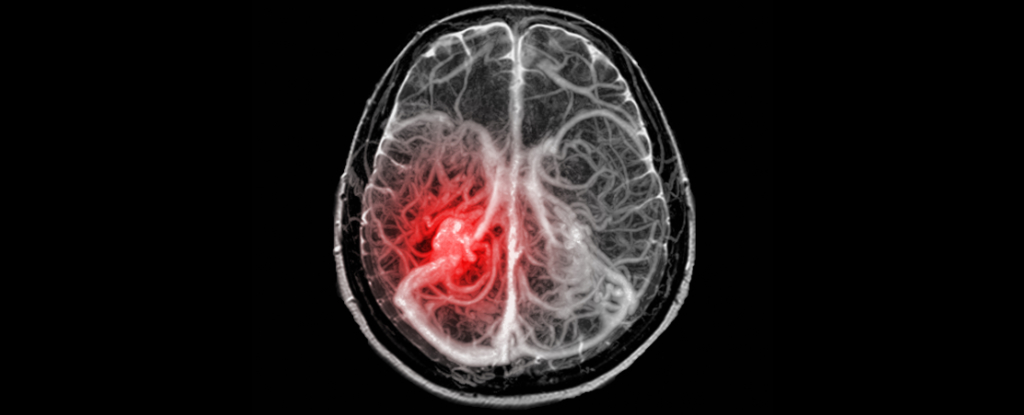

Multimodal AI has applications in many fields, such as healthcare, entertainment, and fashion. However, it faces several technical challenges in integrating and processing diverse data types, as well as ethical concerns about bias, fairness, and the responsible use of data that arise from such integration. These challenges have important implications for user interaction, business deployment, and regulation. Developers, policymakers, and users must understand them when working with multimodal AI systems.

In this article, we will explore the technical challenges of integrating different data types into multimodal systems, the associated ethical considerations, and how to address them carefully to ensure responsible and effective use of AI technology.

Understanding Multimodal AI Data Integration

Multimodal AI systems mimic human understanding by processing multiple types of information. Just as humans rely on several channels of expression (words, tone, facial expression, and body language), multimodal AI processes various types of data, such as text, images, audio, and video, to understand a situation and offer a more nuanced interpretation than a single data type could provide.

Multimodal systems combine a network of interconnected components, each managing different aspects of data processing using sophisticated machine learning algorithms and neural networks. For example, images are processed by computer vision technology, while textual data are handled by an NLP (Natural Language Processing) model.

The fusion model, the central part of multimodal AI, integrates data from different modalities into one unified representation. This is crucial for synthesizing diverse information, enabling AI to make more accurate and contextual decisions.
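As a rough illustration of what a fusion step might look like, the sketch below normalizes each modality's feature vector and concatenates them into one representation. The function names (`l2_normalize`, `fuse_by_concatenation`) and the toy vectors are assumptions made for this example; real fusion models are usually learned neural networks rather than a fixed concatenation.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        return list(vec)
    return [x / norm for x in vec]

def fuse_by_concatenation(features):
    """Concatenate normalized per-modality vectors in a fixed (sorted) order."""
    fused = []
    for name in sorted(features):
        fused.extend(l2_normalize(features[name]))
    return fused

# Hypothetical embeddings, standing in for the output of separate
# vision and text encoders.
features = {"image": [3.0, 4.0], "text": [0.0, 2.0, 0.0]}
fused = fuse_by_concatenation(features)
print(fused)
```

Sorting the modality names keeps the layout of the fused vector stable from sample to sample, which a downstream model would depend on.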

Multimodal Data Integration Challenges

Multimodal AI offers enhanced decision-making and has applications from healthcare and finance to transportation and entertainment, where combining data types allows for deeper analysis, prediction, and problem-solving. Even so, these systems face several technical and ethical challenges.

Technical Challenges

Noisy Multimodal Data: A primary challenge is learning to mitigate the impact of noise (unwanted or misleading information) in multimodal datasets. High-dimensional data spanning varied data types tends to contain complex and diverse forms of noise, which makes it difficult to analyze and use. The heterogeneity of multi-source data complicates this, but it also offers a remedy: exploring the correlations among modalities can help identify and reduce potential noise.
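One simple way to exploit correlation between modalities is to compare paired embeddings and flag pairs that disagree. The sketch below assumes the image and text embeddings already live in a shared vector space (for example, from a jointly trained encoder); that assumption, the function names, and the 0.5 threshold are all illustrative.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def flag_noisy_pairs(pairs, threshold=0.5):
    """Return indices of pairs whose modalities agree less than `threshold`."""
    return [i for i, (img, txt) in enumerate(pairs)
            if cosine_similarity(img, txt) < threshold]

# Hypothetical (image_embedding, text_embedding) pairs.
pairs = [
    ([1.0, 0.0], [0.9, 0.1]),  # modalities agree
    ([1.0, 0.0], [0.0, 1.0]),  # orthogonal: possibly a noisy or mismatched pair
]
noisy = flag_noisy_pairs(pairs)
print(noisy)
```

Flagged pairs would normally be sent for review or down-weighted rather than dropped outright, since low similarity can also reflect genuinely complementary information.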

Incomplete Multimodal Data: One of the fundamental challenges is developing systems that can learn from multimodal data when some modalities are missing. For example, when a dataset is expected to have images and text, some entries may contain only the text, leading to incomplete multimodal datasets. Building an adaptable and reliable learning model that can work efficiently with incomplete data is still challenging.
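A minimal sketch of one way to tolerate missing modalities: average only the embeddings that are present and keep a mask recording which ones were used. It assumes every modality has already been projected to the same dimension, and `fuse_available` is a hypothetical helper, not a method from the article.

```python
def fuse_available(sample):
    """Average the embeddings that are present; also report a presence mask.

    `sample` maps modality name -> embedding list, or None if missing.
    All present embeddings are assumed to share one dimension.
    """
    present = [vec for vec in sample.values() if vec is not None]
    if not present:
        raise ValueError("sample has no modalities")
    dim = len(present[0])
    fused = [sum(vec[i] for vec in present) / len(present) for i in range(dim)]
    mask = {name: vec is not None for name, vec in sample.items()}
    return fused, mask

# Hypothetical dataset entries: one complete, one with the image missing.
complete = {"image": [1.0, 1.0], "text": [3.0, 5.0]}
text_only = {"image": None, "text": [2.0, 4.0]}

fused_full, mask_full = fuse_available(complete)
fused_partial, mask_partial = fuse_available(text_only)
print(fused_full, mask_full)
print(fused_partial, mask_partial)
```

Passing the mask downstream lets a model distinguish "image absent" from "image present but uninformative".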

Computational Requirements for Multimodal AI: Multimodal AI systems require substantial computational resources to process and analyze large volumes of data from multiple modalities. These systems rely on high-performance hardware such as Graphics Processing Units (GPUs), which efficiently handle complex, parallel computations, and Tensor Processing Units (TPUs), which are processors specialized for machine learning tasks. Because this hardware is costly to acquire and operate, some organizations face challenges in adopting multimodal AI.

Data Alignment: Combining data from multiple sources poses significant challenges due to differences in data format (e.g., text vs. audio), timing (e.g., video and audio tracks that are out of sync), and interpretation. For multimodal AI systems to function effectively, the data must be synchronized and accurately integrated.
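For the timing part of the problem, one common approach is nearest-timestamp matching with a tolerance. The sketch below assumes both streams carry sorted timestamps in seconds; `align_nearest` and the sample values are made up for illustration.

```python
from bisect import bisect_left

def align_nearest(video_ts, audio_ts, tolerance):
    """Pair each video timestamp with the nearest audio timestamp.

    Both lists must be sorted (seconds). A video frame with no audio
    sample within `tolerance` seconds is paired with None.
    """
    aligned = []
    for t in video_ts:
        i = bisect_left(audio_ts, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = audio_ts[max(i - 1, 0):i + 1]
        best = min(candidates, key=lambda a: abs(a - t), default=None)
        if best is not None and abs(best - t) > tolerance:
            best = None
        aligned.append((t, best))
    return aligned

# Hypothetical timestamps: the last video frame has no nearby audio.
video_ts = [0.04, 0.52, 2.0]
audio_ts = [0.0, 0.5, 1.0]
aligned = align_nearest(video_ts, audio_ts, tolerance=0.05)
print(aligned)
```

Frames paired with `None` can then be handled explicitly (dropped, interpolated, or treated as a missing modality) instead of being silently matched to unrelated audio.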

Accuracy and Reliability: It is essential to ensure that AI models generate accurate and reliable output, particularly in critical fields like healthcare and self-driving cars. However, the complex process of integrating diverse data types amplifies the likelihood of errors or inconsistencies in the final output. Additionally, when complex individual modal systems are merged into one multimodal system, it increases the system’s opacity, making it more challenging to identify errors, biases or anomalies.

Moreover, integrating diverse modalities makes it harder to ensure that the information aligns and makes sense together. A mismatch between modalities, or intermodal incoherence, can lead to conflicts or ambiguities, with information from one source contradicting another.
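A simple decision-level check for this kind of incoherence is to compare the label each modality predicts on its own and surface any disagreement. The sketch assumes per-modality classifiers already exist and emit one label each; `check_coherence` and the emotion labels are hypothetical.

```python
from collections import Counter

def check_coherence(predictions):
    """Return (majority_label, modalities that disagree with it).

    `predictions` maps modality name -> predicted label. On a tie,
    Counter.most_common keeps the label that was seen first.
    """
    counts = Counter(predictions.values())
    majority, _ = counts.most_common(1)[0]
    conflicts = sorted(m for m, label in predictions.items() if label != majority)
    return majority, conflicts

# Hypothetical per-modality outputs for one video clip.
predictions = {"audio": "happy", "text": "happy", "video": "angry"}
majority, conflicts = check_coherence(predictions)
print(majority, conflicts)
```

A non-empty conflict list is a signal to lower confidence or route the sample for human review, not proof that the minority modality is wrong.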

Ethical Challenges

There are several ethical challenges associated with data integration in multimodal AI systems.

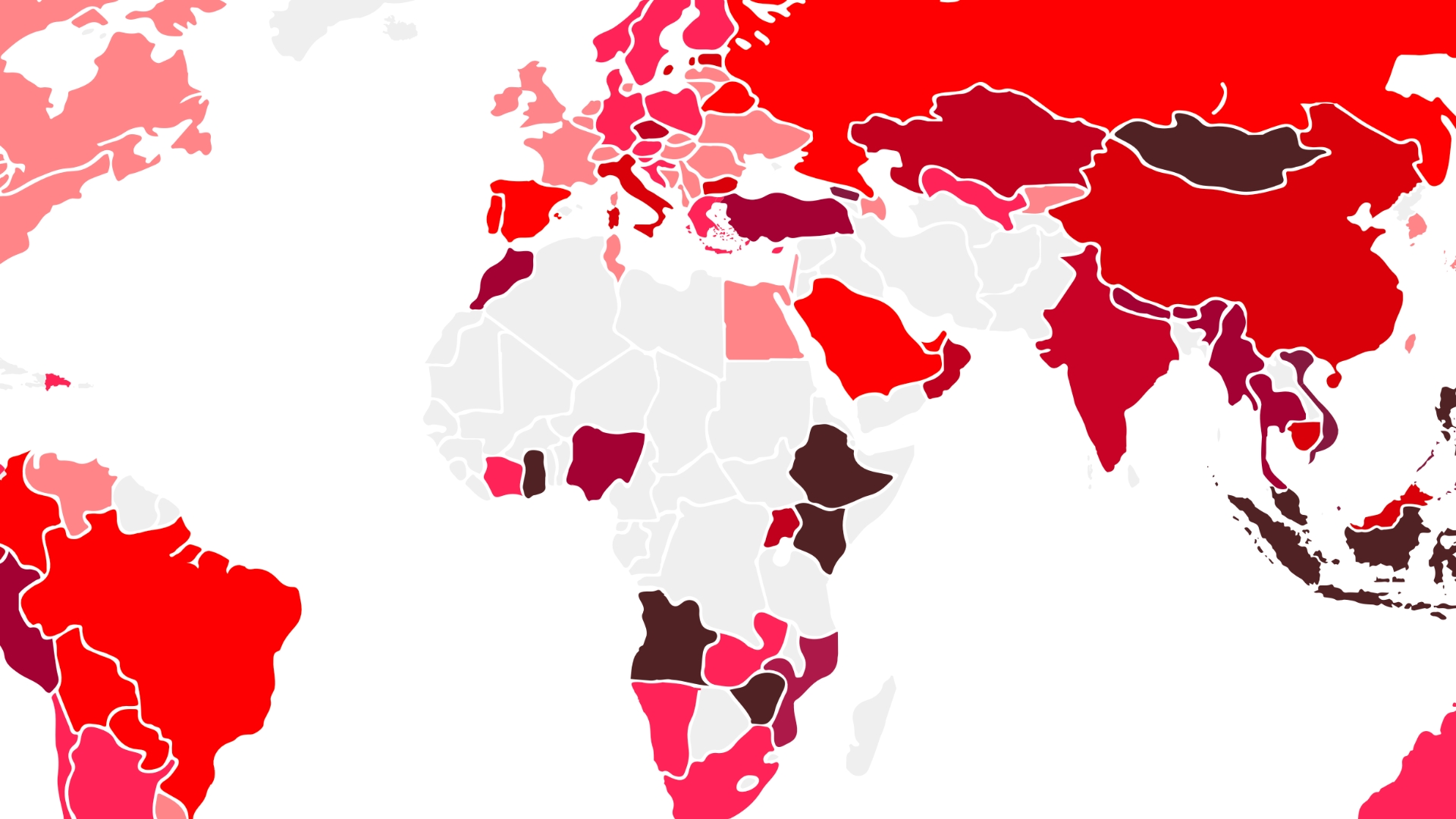

Bias and Fairness: The inherent biases in training data are one of AI’s most critical ethical issues. Biased data that doesn’t include information about all demographics or groups may create skewed multimodal systems. For example, a virtual assistant trained predominantly on female voice datasets may struggle to work with male voices.

Privacy Concerns: Multimodal AI is trained on diverse data types, including personal information—such as images, voice recordings, videos, and text—extracted from various sources. Combining different types of data, such as visual, text, and voice, could increase the risk of exposing personally identifiable information compared to using a single data type.

Transparency: As these AI systems integrate various data types and apply complex algorithms to process and analyze them, understanding the decision-making process of these models is difficult, raising concerns about transparency and accountability.

Strategies for Effective Multimodal Data Integration

Here are a few effective methods that can be implemented to overcome the various technical and ethical issues faced when integrating data in multimodal AI.

Tackling Technical Challenges

Enhanced Computational Efficiency: Because multimodal AI models are computationally intensive, it is essential both to develop smaller, simpler models that run efficiently without much computational power and to optimize existing models so they achieve the same results with fewer resources.
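The article does not name a specific optimization, so as one example of getting similar results with fewer resources, the sketch below shows symmetric 8-bit weight quantization, a widely used compression technique. The function names and weight values are illustrative.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]

# Hypothetical layer weights.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized, scale)
```

Each weight is stored in one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight.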

Innovative Data Integration Techniques: Developers are working on techniques to integrate diverse data types. One example is more sophisticated neural network architectures, such as hybrid models that combine different types of specialized layers (convolutional layers for images and recurrent layers for sequential data like text) to handle and process multiple data types simultaneously.
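To make the hybrid idea concrete, here is a deliberately tiny, dependency-free sketch: a 1-D convolution stands in for the convolutional branch, a scalar recurrence for the recurrent branch, and the two outputs are joined into one feature vector. The weights are fixed by hand rather than trained, and all names and values are assumptions for illustration; a real model would use a deep learning framework.

```python
import math

def conv1d(signal, kernel):
    """'Valid' 1-D cross-correlation, the core operation of a conv layer."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn_last_state(sequence, w_in, w_rec):
    """Scalar recurrence: h_t = tanh(w_in * x_t + w_rec * h_{t-1})."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h)
    return h

def hybrid_features(pixels, tokens, kernel, w_in, w_rec):
    """Run each modality through its own branch, then join the outputs."""
    image_feature = max(conv1d(pixels, kernel))          # global max pooling
    text_feature = rnn_last_state(tokens, w_in, w_rec)   # final hidden state
    return [image_feature, text_feature]

# Hypothetical inputs: one row of pixels and a numeric token sequence.
features = hybrid_features(
    pixels=[0.0, 0.0, 1.0, 1.0],
    tokens=[1.0, 0.0],
    kernel=[-1.0, 1.0],  # responds to a dark-to-bright edge
    w_in=1.0,
    w_rec=0.5,
)
print(features)
```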

Improving Accuracy and Reliability: To address the challenges associated with multimodal AI data, techniques such as advanced error-detection algorithms and robust validation processes are employed to ensure accuracy and reliability. Models are also designed to improve over time as new data arrives, which keeps them up to date and accurate.

Ethical Guidelines

Bias Mitigation Protocols: It is essential to develop protocols and guidelines to identify and mitigate biases in multimodal AI training data and algorithms. Bias can be addressed by using diverse datasets, conducting routine bias audits and incorporating fairness metrics in the development process.

For example, Cogito’s red teaming service is an innovative approach to thoroughly evaluating the performance of multimodal AI systems, including adversarial testing, vulnerability analysis, bias auditing, and response refinement.
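As a small illustration of what a fairness metric in a bias audit could look like, the sketch below computes accuracy per demographic group and the gap between the best and worst group. The group names echo the voice-assistant example earlier in the article, but the records are invented and `accuracy_by_group` is a hypothetical helper, not part of any service mentioned here.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Compute accuracy per group from (group, true_label, predicted_label)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}

def accuracy_gap(per_group):
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())

# Invented evaluation records for a speech-recognition model.
records = [
    ("female", 1, 1),
    ("female", 0, 0),
    ("male", 1, 0),
    ("male", 0, 0),
]
per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A routine audit might track this gap across releases and block deployment when it exceeds an agreed limit.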

Privacy Protection Standards: To address privacy concerns in multimodal AI, it is critically important to implement guidelines and rules for handling sensitive data. Practices such as data anonymization, secure data storage, and federated learning help safeguard data from unauthorized access or breaches while preserving its utility. AI systems must also adhere to privacy laws and regulations such as the GDPR (General Data Protection Regulation) and the CCPA (California Consumer Privacy Act).
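As one narrow example of these practices, the sketch below replaces direct identifiers with keyed hashes using Python's standard `hmac` and `hashlib` modules. Strictly speaking this is pseudonymization rather than full anonymization, and it would be only one piece of a privacy program; the field names, key, and record are invented for illustration.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def pseudonymize_record(record, id_fields, secret_key):
    """Hash only the fields listed in `id_fields`; leave the rest untouched."""
    return {key: pseudonymize(value, secret_key) if key in id_fields else value
            for key, value in record.items()}

# Invented record; in practice the key would come from a secrets manager.
secret_key = b"example-key-do-not-use"
record = {"speaker_name": "Jane Doe", "audio_file": "clip_001.wav"}
safe_record = pseudonymize_record(record, {"speaker_name"}, secret_key)
print(safe_record)
```

Because the same input and key always yield the same digest, records from one person can still be linked for training while the name itself is not stored.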

Supervised Learning: Multimodal AI systems have significantly progressed, especially using supervised learning techniques. These models can be trained on labeled or annotated data to address ethical issues and enhance performance.

Cogito Tech is one of the leading AI training data companies, providing accurately labeled, annotated, and curated multimodal AI training data. Our DataSum, a "Nutrition Facts"-style framework for AI training data, signals a high standard in data handling and management for transparent governance, compliance, fairness, and inclusivity.

Final Words

Multimodal AI will become more advanced and prominent across various sectors, from healthcare to entertainment. However, integrating data in these systems poses several technical and ethical challenges that must be addressed to boost performance while upholding principles of equity and integrity, ultimately enhancing human efficiency and well-being.

The post Navigating the Challenges of Multimodal AI Data Integration appeared first on Cogitotech.