The big switch to DeepSeek is hitting a snag

AI startups can't find DeepSeek model services that are fast enough, stalling the big switch to the buzzy open-source Chinese large language model.

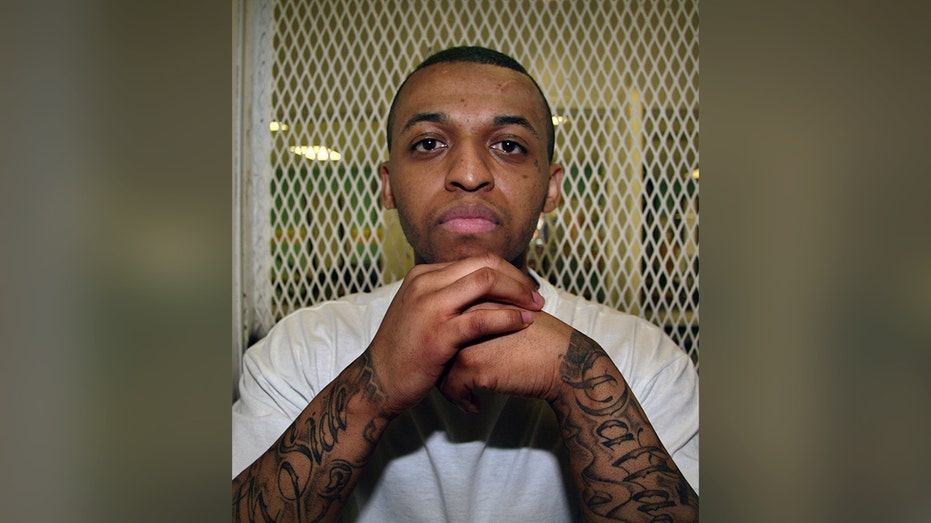

Artur Widak/NurPhoto

- AI startups are clamoring for consistent, secure access to DeepSeek's large language models.

- Cloud providers are having trouble offering it at usable speeds, and DeepSeek's own API is hampered.

- The troubles are delaying the switch to the low-cost AI that rocked markets last week.

DeepSeek may have burst into the mainstream with a bang last week, but US-based AI businesses trying to use the Chinese company's AI models are having a host of troubles.

"We're on our seventh provider," Neal Shah, CEO of Counterforce Health, told Business Insider.

Counterforce, like many startups, accesses AI models through APIs provided by cloud companies. These APIs charge by the token — the unit of measure for inputs and outputs of large language models. This allows costs to scale with usage when companies are young and can't afford to pay for expensive, dedicated computing capacity they might not fully use.

Right now, the company's service, which uses AI to generate responses to insurance claim denials, is free for individuals and in pilot tests with healthcare providers, so getting costs down as far as possible is paramount. DeepSeek's open model was a game-changer.

Since late January, Shah's team has tried, and struggled with, six different API providers. The seventh, Fireworks AI, has been just consistent enough, Shah said. The others were too slow or unreliable.

Artificial Analysis, a website that tracks the availability and performance of AI models across cloud providers, showed seven clouds were running DeepSeek models on Wednesday. Most were running at one-third of the speed of DeepSeek's own API, except for Fireworks AI, which is about half the speed of the Chinese service.

Many businesses are concerned about sharing data with a Chinese API and prefer to use it through a US provider. But many API providers are struggling to offer consistent access to the full DeepSeek models at speeds fast enough to be useful.

The API providers measured by Artificial Analysis batch requests from many customers together for AI inference to lower prices and use computing resources more efficiently. Companies with dedicated computing capacity, especially on Nvidia's H200 chips, likely won't struggle. And those willing to pay hyperscaler cloud prices may find access more reliable and easier to get.

The Chinese company, which rocked markets so thoroughly because its models were cheaper to build and much cheaper to run than Western alternatives, was touted as a booster pack and a leveler for the entire AI startup ecosystem. A few weeks into what was anticipated as a mass conversion, that shift is proving harder than it may have seemed.

DeepSeek did not respond to BI's request for comment.

DeepSeek at speed is hard to find

Theo Browne would like to use DeepSeek, but he can't find a good source. Through his company Ping, Browne makes AI tools for software developers.

He started testing DeepSeek's models in December, when the company released V3, and found that he could get comparable or better results for one-fifteenth the price of proprietary models like Anthropic's Claude.

When the rest of the world caught on in mid-January, options for accessing DeepSeek became inconsistent.

"Most companies are offering a really bad experience right now," Browne told BI. "It's taking 100 times longer to generate a response than any traditional model provider."

Browne went straight to DeepSeek's own API rather than a US-based cloud, a route that wouldn't be an option for a more security-conscious company.

But then DeepSeek's China-hosted API went down on January 26 and has yet to be restored to full function. The company blamed a malicious attack and has been working to resolve it.

Attack aside, slow and spotty service could also stem from clouds lacking hardware powerful enough to run the large model; spreading it across more, weaker hardware adds complexity and slows it down. The immense surge in demand could also hurt speed and reliability.

Baseten, a company that provides mostly dedicated AI computing capacity to clients, has been working with DeepSeek and an outside research lab for months to get the model running well. CEO Tuhin Srivastava told BI that Baseten had the model running faster than DeepSeek's API before the attack.

Several platforms are also taking advantage of DeepSeek's technical prowess by running smaller versions or using DeepSeek's R1 reasoning model to "distill" other open-source models like Meta's Llama. That's what Groq, an aspiring Nvidia competitor and inference provider, is doing. The company signed up 15,000 new users within the first 24 hours of offering the hybrid model, and more than 37,000 organizations have used the model so far, Chief Technology Evangelist Mark Heaps told BI.

Unseen risks

For businesses that can get access to high-speed DeepSeek models, there are other reasons to hesitate.

Pukar Hamal, CEO of software security startup Security Pal, is wary of Chinese AI models and said he's concerned about using DeepSeek's models for business, even if they're run on-premises or via a US-based API.

"I run a security company so I have to be super paranoid," Hamal told BI. A cheap Chinese model may be an attractive option for startups trying to get through the early years and scale, but if they want to sell whatever they're building to a large enterprise customer, a Chinese model is going to be a liability, he said.

"The moment a startup wants to sell to an enterprise, an enterprise wants to know what your exact data architecture system looks like. If they see you're heavily relying on a Chinese-made LLM, ain't no way you're gonna be able to sell it," Hamal said.

He's convinced the DeepSeek moment was hype.

"I think we'll effectively stop talking about it in a couple of weeks," he said.

But for a lot of companies, the low cost is irresistible and the security concern is minimal — at least in the early stages of operation.

Shah, for one, is anonymizing user information before his software calls any model so that patients' identities remain secure.

"Frankly, we don't even fully trust Anthropic and other models. You don't really know where the data is going," Shah said.

DeepSeek's price is irresistible

Counterforce is a somewhat lucky fit for DeepSeek while the model is in its awkward toddler phase. The startup can feed a relatively large amount of data into the model and isn't too worried about output speed, since patients are happy to wait a few minutes for a letter that could save them hundreds of dollars.

Shah is also developing an AI-enabled tool that will call insurance companies on behalf of patients. That means integrating language, voice, and listening models at the speed of conversation. For that to work and be cost-effective, DeepSeek's availability and speed need to improve.

Several cloud providers told BI they are actively working on the problem. Developers have not stopped clamoring for access, said Jasper Zhang, cofounder and CEO of cloud service Hyperbolic.

"After we launched the new DeepSeek model, we saw inference users increase by 150%," Zhang said.

Fireworks, one of the few cloud services to consistently provide decent performance, said its new users in January increased 400% month over month.

Together AI cofounder and CEO Vipul Ved Prakash told BI the company is working on a fix that may improve speed this week.

Zhang is on the case too. His goal is to democratize access to AI so that any startup or individual can build with it. He said open-source models are quickly catching up to proprietary ones.

"R1 is a real killer," Zhang said. Still, DeepSeek's teething troubles leave a window for others to enter, and the longer DeepSeek is hard to access, the greater the chance that the next big open model takes its place.

Have a tip or an insight to share? Contact Emma at ecosgrove@businessinsider.com or use the secure messaging app Signal: 443-333-9088