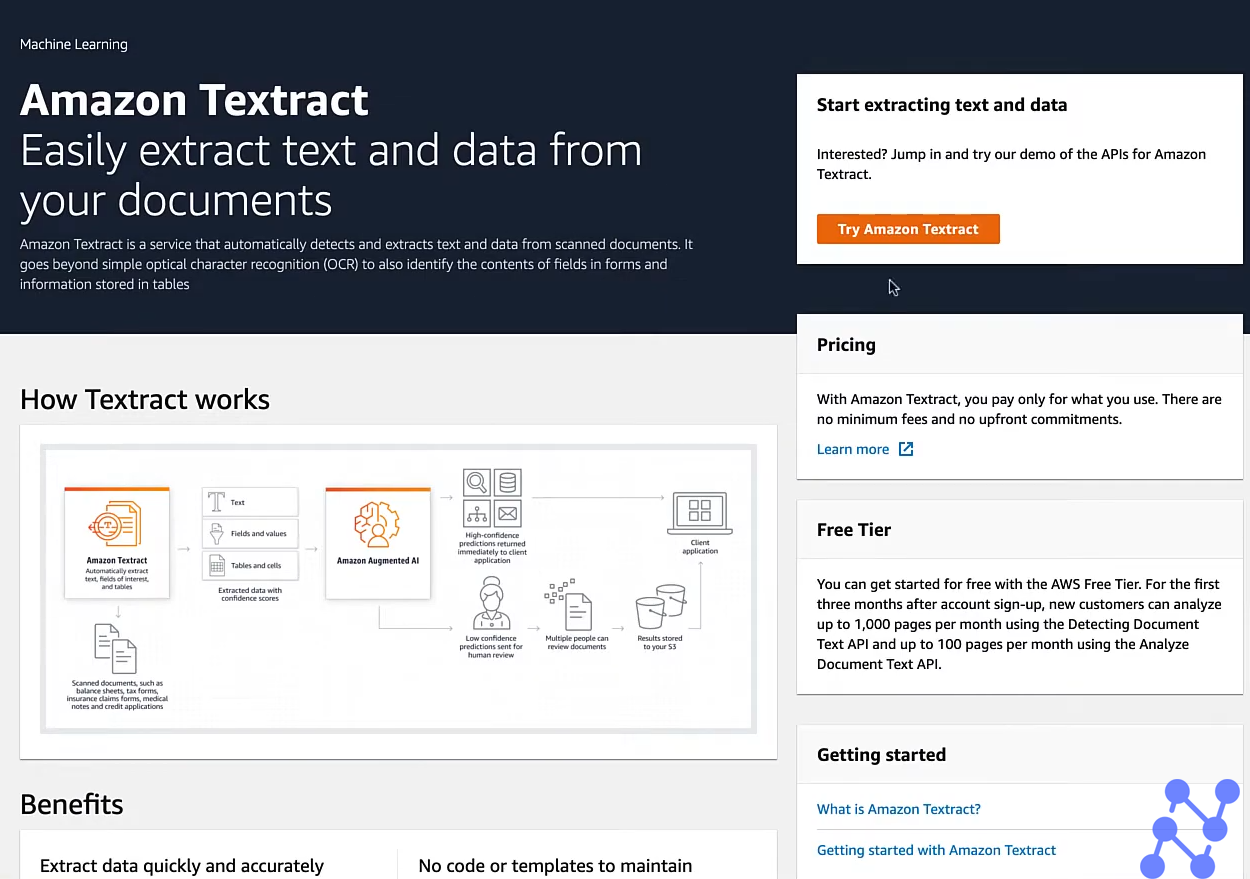

How Is Kubernetes Revolutionizing Scalable AI Workflows in LLMOps?

Learn how Kubernetes enables scalable, efficient LLMOps and AI workflows. The post How Is Kubernetes Revolutionizing Scalable AI Workflows in LLMOps? appeared first on Spritle software.

Introduction

The advent of large language models (LLMs) has transformed artificial intelligence, enabling organizations to innovate and solve complex problems at an unprecedented scale. From powering advanced chatbots to enhancing natural language understanding, LLMs have redefined what AI can achieve. However, managing the lifecycle of LLMs—from data pre-processing and training to deployment and monitoring—presents unique challenges. These challenges include scalability, cost management, security, and real-time performance under unpredictable traffic conditions.

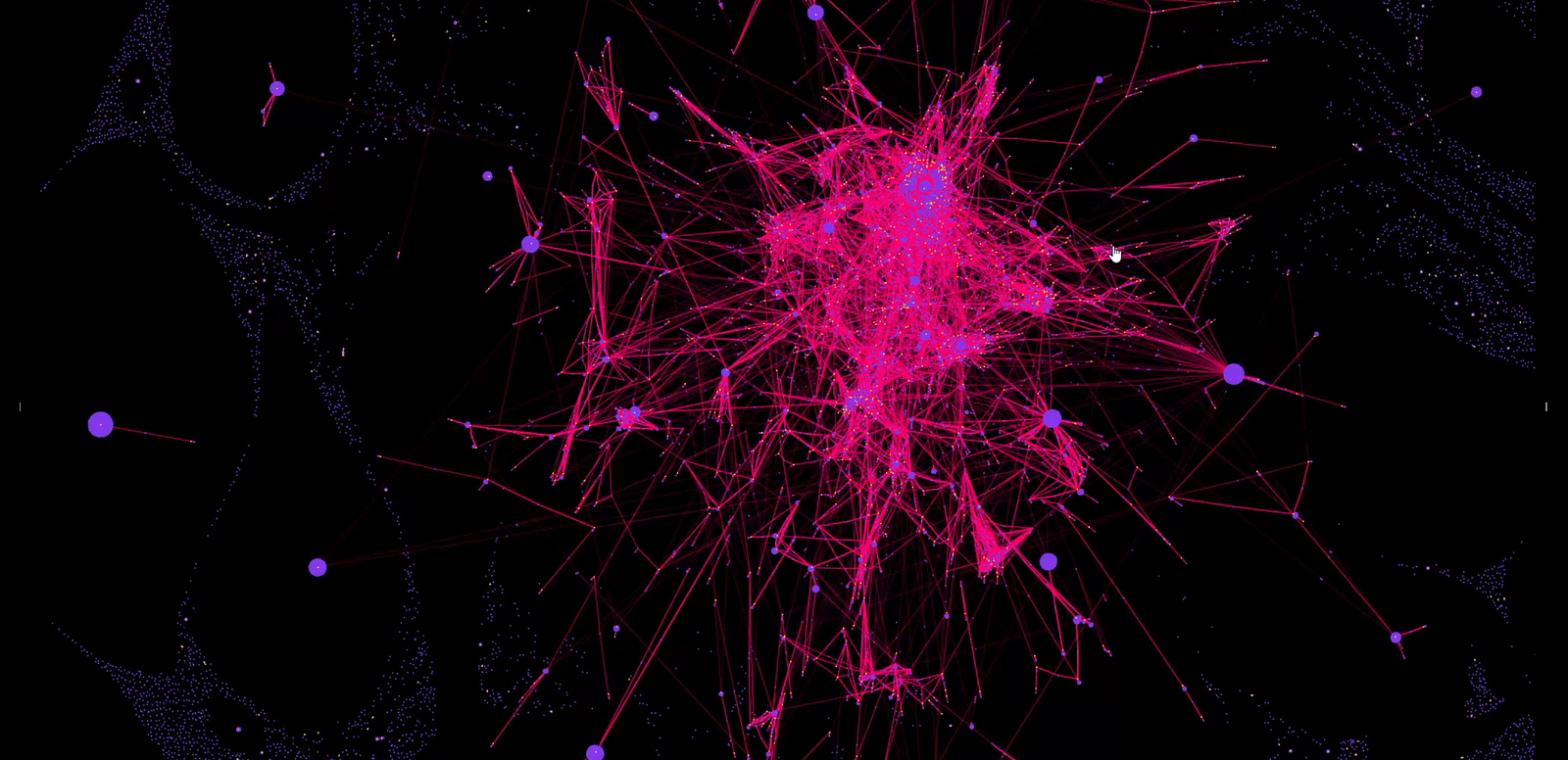

1. Kubernetes: A Game-Changer for LLMOps

Kubernetes, the leading container orchestration platform, has emerged as the cornerstone of Large Language Model Operations (LLMOps), enabling organizations to tackle these challenges efficiently. Here’s an in-depth exploration of how Kubernetes empowers LLMOps with its modular architecture, robust orchestration capabilities, and a rich ecosystem of tools.

Why Kubernetes Stands Out

Kubernetes is more than just a container orchestration platform—it is a robust foundation for running complex workflows at scale. Its modular and declarative design makes it an ideal fit for LLMOps. Organizations can encapsulate the various components of LLM workflows, such as data preprocessing pipelines, model servers, and logging systems, into isolated Kubernetes pods. This encapsulation ensures that each component can scale independently, be updated seamlessly, and perform optimally without disrupting other parts of the workflow.

Modularity and Isolation

Encapsulation also improves maintainability. For instance, a preprocessing pipeline responsible for cleaning and tokenizing data can operate independently from a model inference pipeline, ensuring updates to one do not interfere with the other. This modularity becomes particularly critical in large-scale systems where frequent changes and optimizations are the norm.

2. Scalability: Handling the Unpredictable

Dynamic Workload Management

The modularity of Kubernetes is complemented by its unparalleled scalability, making it ideal for LLM workloads characterized by variable traffic. For instance, a surge in user queries to an LLM-powered chatbot can quickly overwhelm static infrastructure. Kubernetes addresses this through:

- Horizontal Pod Autoscaling (HPA): Adjusts the number of pod replicas in a workload based on observed metrics such as CPU and memory utilization, or custom metrics such as request rate or queue depth. When demand spikes, HPA adds inference pods to handle the load.

- Cluster Autoscaler: Adds nodes when pods cannot be scheduled for lack of resources and removes underutilized nodes, balancing performance and cost-efficiency.
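The scaling decision behind HPA can be sketched in a few lines. The Kubernetes documentation describes the core rule as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up, with no change when the ratio is within a small tolerance of 1.0. The function below is a simplified illustration for a single metric: the function name and the `min_replicas` and `max_replicas` defaults are assumptions for this example, and the real controller also accounts for stabilization windows, missing metrics, and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the core HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Four inference pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

For LLM inference, CPU utilization is often a weak signal, so a custom metric such as queue depth per pod may be a better input to the same rule.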

Real-World Example

Consider a customer support chatbot deployed using an LLM. During a product launch, user interactions surge significantly. With autoscaling configured, Kubernetes adds inference pods and, if necessary, nodes to absorb the increased traffic, reducing the risk of downtime or degraded performance.

3. Serving Models at Scale

Seamless Model Deployment

Deploying and serving large language models for real-time inference is a critical challenge, and Kubernetes is well suited to it. Using model servers like TensorFlow Serving and TorchServe, or a web framework like FastAPI wrapped around the model, developers can expose model endpoints via RESTful APIs or gRPC. These endpoints integrate easily with downstream applications to perform tasks like text generation, summarization, and classification.
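To make the idea of a model endpoint concrete, here is a minimal sketch of a REST inference server that uses only the Python standard library. It is not how TensorFlow Serving or any production server works internally: the `generate` function is a hypothetical stand-in for a real model call, and the `/generate` and `/healthz` paths are assumptions chosen for this example. The health route is the kind of endpoint a Kubernetes readiness or liveness probe would call.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def generate(prompt):
    """Hypothetical stand-in for a real model call."""
    return prompt.upper()


class InferenceHandler(BaseHTTPRequestHandler):
    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self):
        # Health route intended for Kubernetes probes.
        if self.path == "/healthz":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/generate":
            self._send_json(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        self._send_json(200, {"text": generate(request.get("prompt", ""))})

    def log_message(self, format, *args):
        pass  # keep the example quiet


def start_server(port=0):
    """Serve on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), InferenceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_prompt(server, prompt):
    """Send one prompt to the running server and return the decoded reply."""
    conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
    conn.request("POST", "/generate", body=json.dumps({"prompt": prompt}),
                 headers={"Content-Type": "application/json"})
    payload = json.loads(conn.getresponse().read())
    conn.close()
    return payload


if __name__ == "__main__":
    server = start_server()
    print(post_prompt(server, "hello"))
    server.shutdown()
```

Inside a container the server would bind to `0.0.0.0` on a fixed port so that a Kubernetes Service can route traffic to it.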

Deployment Strategies

Kubernetes supports advanced deployment strategies such as:

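- Rolling Updates: Replace pods running the old model version with new ones incrementally, which is the default behavior of a Deployment and keeps downtime minimal.

- Blue-Green Deployments: Run the new version (green) alongside the current one (blue), switch traffic to green once it is verified, and keep blue available as a fallback. Kubernetes has no dedicated blue-green resource; the pattern is typically built by repointing a Service selector or by using an ingress controller or service mesh.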

These strategies ensure continuous availability, enabling organizations to iterate and improve their models without disrupting user experience.
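Both strategies can be expressed in manifests. The sketch below builds them as Python dictionaries and prints JSON, which `kubectl apply -f` accepts alongside YAML. The resource names, labels, image registry, and port are assumptions for illustration. The Deployment uses the built-in `RollingUpdate` strategy, and the blue-green switch is modelled as changing the `version` label in a Service selector.

```python
import json


def inference_deployment(version, replicas=3):
    """Deployment for one model version (names and image are hypothetical)."""
    labels = {"app": "llm-inference", "version": version}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"llm-inference-{version}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Add at most one extra pod and never drop below full capacity.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": "server",
                    "image": f"registry.example.com/llm-inference:{version}",
                    "ports": [{"containerPort": 8080}],
                    "readinessProbe": {
                        "httpGet": {"path": "/healthz", "port": 8080},
                    },
                }]},
            },
        },
    }


def service_for(version):
    """Service whose selector decides which version receives traffic."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "llm-inference"},
        "spec": {
            "selector": {"app": "llm-inference", "version": version},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


# Blue-green: both Deployments exist; the Service points at one of them.
blue, green = inference_deployment("v1"), inference_deployment("v2")
live = service_for("v2")  # switch traffic to green; use "v1" to roll back
print(json.dumps(live, indent=2))
```

Because the blue Deployment keeps running, rolling back is a matter of applying the Service with the previous selector.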

4. Efficient Data Preprocessing

Parallel Execution with Jobs and CronJobs

Data preprocessing and feature engineering are integral to LLM workflows, involving tasks like cleaning, tokenizing, and augmenting datasets. Kubernetes-native tools handle these processes efficiently:

- Jobs: Run preprocessing tasks to completion and can launch many pods in parallel across multiple nodes, reducing processing time.

- CronJobs: Automate recurring tasks, such as nightly dataset updates or periodic feature extraction pipelines.

Improved Throughput

The parallelism offered by Kubernetes ensures that preprocessing does not become a bottleneck, even for massive datasets, making it a valuable tool for real-time and batch workflows alike.
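One way to get this parallelism is an Indexed Job, where Kubernetes gives each pod a distinct `JOB_COMPLETION_INDEX` environment variable and each worker processes its own slice of the data. The sketch below is illustrative: the Job name, image, and the `TOTAL_SHARDS` variable are assumptions for this example, while `completionMode: Indexed` and `JOB_COMPLETION_INDEX` are standard Kubernetes features. A CronJob wrapping the same spec schedules it nightly.

```python
import os


def shard(items, index, total):
    """Return the slice of items handled by worker `index` out of `total`."""
    return items[index::total]


def preprocessing_job(parallelism):
    """Indexed Job that runs `parallelism` workers, one per shard."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": "tokenize-corpus"},
        "spec": {
            "completionMode": "Indexed",
            "completions": parallelism,
            "parallelism": parallelism,
            "template": {"spec": {
                "restartPolicy": "Never",
                "containers": [{
                    "name": "worker",
                    "image": "registry.example.com/preprocess:latest",
                    # Hypothetical variable telling workers the shard count.
                    "env": [{"name": "TOTAL_SHARDS",
                             "value": str(parallelism)}],
                }],
            }},
        },
    }


def nightly_cronjob(job):
    """Wrap a Job spec in a CronJob that runs every day at 02:00."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": "nightly-" + job["metadata"]["name"]},
        "spec": {"schedule": "0 2 * * *",
                 "jobTemplate": {"spec": job["spec"]}},
    }


def worker_files(files):
    """Inside a pod: pick this worker's files from the environment."""
    index = int(os.environ.get("JOB_COMPLETION_INDEX", "0"))
    total = int(os.environ.get("TOTAL_SHARDS", "1"))
    return shard(files, index, total)
```

Striding through the file list keeps shards balanced even when the number of files is not a multiple of the worker count.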

5. High Availability and Resilience

Ensuring Uptime

High availability is a cornerstone of LLMOps, and Kubernetes supports it through multi-zone and multi-region deployments. A single cluster can spread nodes and pods across multiple availability zones, so applications stay operational when one zone fails. Multi-region deployments, which usually mean running a separate cluster in each region, add further resilience and reduce latency for global users.
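Within one cluster, spreading replicas across zones is commonly requested with `topologySpreadConstraints` in the pod spec. The sketch below builds such a constraint and adds a small helper that computes the skew the scheduler tries to keep bounded. The label values and function names are assumptions for illustration, while the field names and the `topology.kubernetes.io/zone` node label are standard.

```python
def spread_across_zones(labels, max_skew=1):
    """Pod-spec fragment asking the scheduler to balance pods across zones."""
    return [{
        "maxSkew": max_skew,
        "topologyKey": "topology.kubernetes.io/zone",
        # Refuse to schedule rather than exceed the allowed imbalance.
        "whenUnsatisfiable": "DoNotSchedule",
        "labelSelector": {"matchLabels": labels},
    }]


def zone_skew(pods_per_zone):
    """Largest difference in matching pod counts between any two zones."""
    counts = list(pods_per_zone.values())
    return max(counts) - min(counts)


constraints = spread_across_zones({"app": "llm-inference"})
print(constraints[0]["topologyKey"])
print(zone_skew({"zone-a": 3, "zone-b": 2, "zone-c": 2}))  # 1
```

With `maxSkew` set to 1, losing a single zone removes roughly an equal share of replicas instead of all of them.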

Service Mesh Integration

Service meshes like Istio and Linkerd enhance the resilience of Kubernetes deployments by:

- Managing inter-component communication.

- Providing features like load balancing, secure communication, and traffic shaping.

This ensures robust and fault-tolerant communication between components in complex LLM workflows.

6. Security and Compliance

Protecting Sensitive Data

Security is paramount in LLMOps, especially when handling sensitive data such as personal or proprietary information. Kubernetes provides several built-in features to secure LLM deployments:

- Role-Based Access Control (RBAC): Enforces fine-grained permissions to limit access to critical resources.

- Network Policies: Restrict communication between pods, reducing the attack surface.

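- Secrets Management: Stores sensitive information like API keys and database credentials separately from container images and pod specs. Secret values are only base64-encoded by default, so encryption at rest and tight RBAC rules should be enabled to protect them.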
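Two of these features can be illustrated with manifests built as Python dictionaries. The first is a NetworkPolicy that lets only gateway pods reach the inference pods. The second is a Secret, which also shows that its `data` values are base64-encoded and therefore trivially reversible. The namespace, labels, port, and key names are assumptions for this sketch, and NetworkPolicies only take effect when the cluster's network plugin enforces them.

```python
import base64


def allow_only_gateway(namespace="llm"):
    """NetworkPolicy: only pods labelled app=api-gateway may reach inference."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "inference-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": "llm-inference"}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector":
                          {"matchLabels": {"app": "api-gateway"}}}],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }],
        },
    }


def secret_manifest(name, values):
    """Opaque Secret; each value is base64-encoded, not encrypted."""
    encoded = {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
               for key, value in values.items()}
    return {"apiVersion": "v1", "kind": "Secret", "type": "Opaque",
            "metadata": {"name": name}, "data": encoded}


secret = secret_manifest("model-api", {"API_KEY": "example-key"})
# Anyone who can read the Secret object can recover the value.
print(base64.b64decode(secret["data"]["API_KEY"]).decode("utf-8"))
```

The decoding step at the end is the reason access to Secret objects is usually restricted with RBAC.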

Compliance for Sensitive Applications

For industries like healthcare and finance, compliance with regulations such as GDPR and HIPAA is essential. Kubernetes’ security features provide building blocks for meeting these requirements, although compliance ultimately depends on how the cluster and the processes around it are configured and operated.

7. Monitoring and Observability

Maintaining System Health

Monitoring and observability are essential for maintaining the performance of LLM systems. Kubernetes offers a rich ecosystem of tools for this purpose:

- Prometheus and Grafana: Prometheus scrapes and stores metrics such as resource usage, model latency, and error rates, and Grafana visualizes them in dashboards.

- Jaeger and OpenTelemetry: Enable distributed tracing, allowing teams to diagnose bottlenecks and latency issues across workflows.

Custom Metrics for LLMs

Inference servers can export custom metrics, such as average response time or token generation speed, providing insights tailored to the specific requirements of LLM-powered applications.
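As a rough illustration, the sketch below computes two such metrics from recorded requests and renders them in the Prometheus text exposition format, which is what a `/metrics` endpoint returns when scraped. The metric names, the `model` label, and the sample data are assumptions for this example. A real server would more likely use a Prometheus client library and histograms rather than precomputed averages.

```python
def render_metrics(samples, model):
    """Render average latency and token throughput as Prometheus text.

    samples: list of (tokens_generated, latency_seconds), one per request.
    """
    total_tokens = sum(tokens for tokens, _ in samples)
    total_seconds = sum(seconds for _, seconds in samples)
    avg_latency = total_seconds / len(samples) if samples else 0.0
    tokens_per_second = total_tokens / total_seconds if total_seconds else 0.0
    lines = [
        "# HELP llm_response_seconds_avg Average response time per request.",
        "# TYPE llm_response_seconds_avg gauge",
        f'llm_response_seconds_avg{{model="{model}"}} {avg_latency}',
        "# HELP llm_tokens_per_second Tokens generated per second.",
        "# TYPE llm_tokens_per_second gauge",
        f'llm_tokens_per_second{{model="{model}"}} {tokens_per_second}',
    ]
    return "\n".join(lines) + "\n"


# Two requests: 120 tokens in 2 s and 80 tokens in 2 s.
print(render_metrics([(120, 2.0), (80, 2.0)], model="demo"))
```

Once Prometheus scrapes these values, they can be graphed in Grafana or used as custom metrics for autoscaling.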

8. Leveraging Specialized Hardware

GPU and TPU Support

LLMs are computationally intensive, often requiring GPUs or TPUs for training and inference. Kubernetes makes it simple to manage these resources:

- GPU/TPU Scheduling: Ensures efficient allocation to pods requiring high-performance computing.

- Device Plugins: Expose accelerators to containers, optimizing hardware utilization.

Flexible Resource Allocation

Organizations can prioritize GPUs for training while reserving CPUs for lighter inference tasks, ensuring cost-effective resource utilization.
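In practice this split is expressed through resource requests and limits on each container. The sketch below builds two pod manifests: a training pod that requests a GPU through the `nvidia.com/gpu` resource exposed by the NVIDIA device plugin, and a CPU-only pod for lighter inference. The pod names, images, and sizes are assumptions for illustration, and other accelerators use different resource names.

```python
def container_resources(gpus=0, cpu="2", memory="8Gi"):
    """Resource block; a GPU count of zero yields a CPU-only container."""
    resources = {"requests": {"cpu": cpu, "memory": memory},
                 "limits": {"memory": memory}}
    if gpus:
        # Extended resources such as GPUs are set under limits.
        resources["limits"]["nvidia.com/gpu"] = gpus
    return resources


def pod(name, image, **resource_args):
    """Single-container pod with the given resource settings."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "main",
                "image": image,
                "resources": container_resources(**resource_args),
            }],
        },
    }


trainer = pod("fine-tune", "registry.example.com/train:latest",
              gpus=1, cpu="8", memory="64Gi")
light_inference = pod("classifier", "registry.example.com/serve:latest")
print(trainer["spec"]["containers"][0]["resources"]["limits"])
```

The scheduler places the training pod only on a node that advertises a free GPU, while the CPU-only pod can land on cheaper nodes.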

9. Automating ML Pipelines

Streamlined Operations with Kubeflow and Argo

Continuous retraining and fine-tuning are essential for adapting LLMs to evolving data and requirements. Kubernetes supports this with:

- Kubeflow: Provides an end-to-end ecosystem for machine learning, from data ingestion to serving.

- Argo Workflows: Orchestrates complex pipelines using Directed Acyclic Graphs (DAGs), simplifying multi-step workflows.
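The DAG idea itself is independent of any one tool. The sketch below is not Argo or Kubeflow code; it uses Python's standard `graphlib` module (Python 3.9 or later) to show how a retraining pipeline described as a DAG resolves into waves of steps, where every step in a wave can run in parallel once the previous wave has finished. The step names are assumptions for illustration.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (hypothetical pipeline).
PIPELINE = {
    "ingest": set(),
    "tokenize": {"ingest"},
    "fine_tune": {"tokenize"},
    "evaluate": {"fine_tune"},
    "safety_check": {"fine_tune"},
    "deploy": {"evaluate", "safety_check"},
}


def execution_waves(dag):
    """Group steps into waves; steps in one wave have no mutual dependency."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(execution_waves(PIPELINE), start=1):
    print(number, wave)
```

A workflow engine applies the same ordering but runs each step as a pod and handles retries, artifacts, and scheduling.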

Efficient Automation

These tools reduce manual effort, accelerate model iteration, and ensure workflows are reproducible and reliable.

10. Scalable Storage and Data Management

Persistent Storage

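Kubernetes connects to storage solutions like Amazon EFS, Google Persistent Disk, and on-premises NFS through PersistentVolumes and Container Storage Interface (CSI) drivers. This lets large-scale training or inference workloads mount shared datasets and model weights, with throughput depending on the storage backend chosen.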

Managing Checkpoints and Logs

Kubernetes-native storage integrations simplify the management of checkpoints and logs, crucial for debugging and tracking model performance.
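A common pattern is to request storage with a PersistentVolumeClaim and mount it into the training pod at the path where checkpoints are written. The sketch below builds both pieces as Python dictionaries. The claim name, size, mount path, and the `shared-nfs` storage class are assumptions for illustration, and `ReadWriteMany` only works with backends that support shared access, such as NFS or EFS.

```python
import copy


def checkpoint_claim(name, size="500Gi", storage_class="shared-nfs"):
    """PersistentVolumeClaim for shared checkpoint storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def mount_claim(pod_spec, claim_name, mount_path="/checkpoints"):
    """Return a copy of pod_spec with the claim mounted in every container."""
    spec = copy.deepcopy(pod_spec)
    spec.setdefault("volumes", []).append({
        "name": "checkpoints",
        "persistentVolumeClaim": {"claimName": claim_name},
    })
    for container in spec["containers"]:
        container.setdefault("volumeMounts", []).append(
            {"name": "checkpoints", "mountPath": mount_path})
    return spec


claim = checkpoint_claim("llm-checkpoints")
trainer_spec = {"containers": [{"name": "trainer",
                                "image": "registry.example.com/train:latest"}]}
mounted = mount_claim(trainer_spec, claim["metadata"]["name"])
print(mounted["containers"][0]["volumeMounts"])
```

Because the claim outlives individual pods, a restarted training pod can resume from the last checkpoint written to the mounted path.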

11. Portability Across Cloud and On-Premises

Hybrid and Multi-Cloud Strategies

Kubernetes provides unmatched portability, allowing LLM workloads to move seamlessly between cloud providers or on-premises data centers. Tools like Velero and Kasten offer backup and restore capabilities, ensuring disaster recovery and business continuity.

Federated Kubernetes

Cluster federation tools, which run on top of Kubernetes rather than being part of its core, enable centralized management of clusters across multiple regions, simplifying global deployments and enhancing flexibility.

12. Accelerating Development with AI Platforms

Pre-Built Integrations

AI tooling such as the Hugging Face Transformers library and the OpenAI APIs works well with Kubernetes: models and API clients are packaged into container images and deployed like any other workload, enabling rapid development and deployment of LLM-powered solutions.

Example Use Cases

Using Hugging Face’s Transformers library, organizations can deploy state-of-the-art models for tasks like sentiment analysis or summarization with minimal effort.

Conclusion

Kubernetes has redefined the landscape of LLMOps by providing a scalable, resilient, and secure platform for managing large language models. Its modular architecture, rich orchestration features, and robust ecosystem of tools empower organizations to overcome the challenges of LLM deployment at scale. By leveraging Kubernetes, businesses can ensure their AI solutions remain performant, cost-effective, and adaptable to evolving demands. As AI continues to advance, Kubernetes stands as a critical enabler of innovation and operational excellence in the field of large language models.
