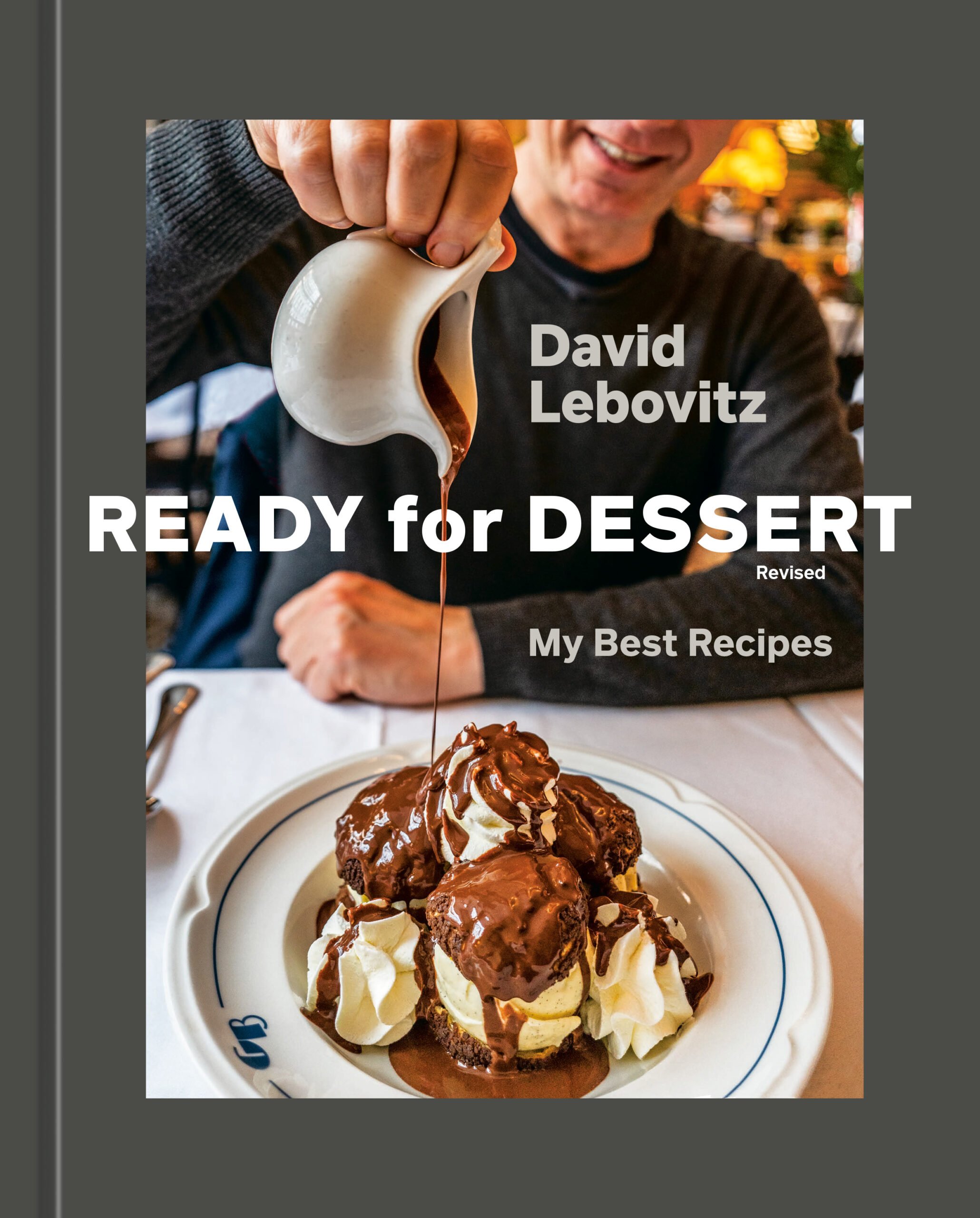

PSA: Google is now automatically adding watermarks to images edited with Magic Editor


|

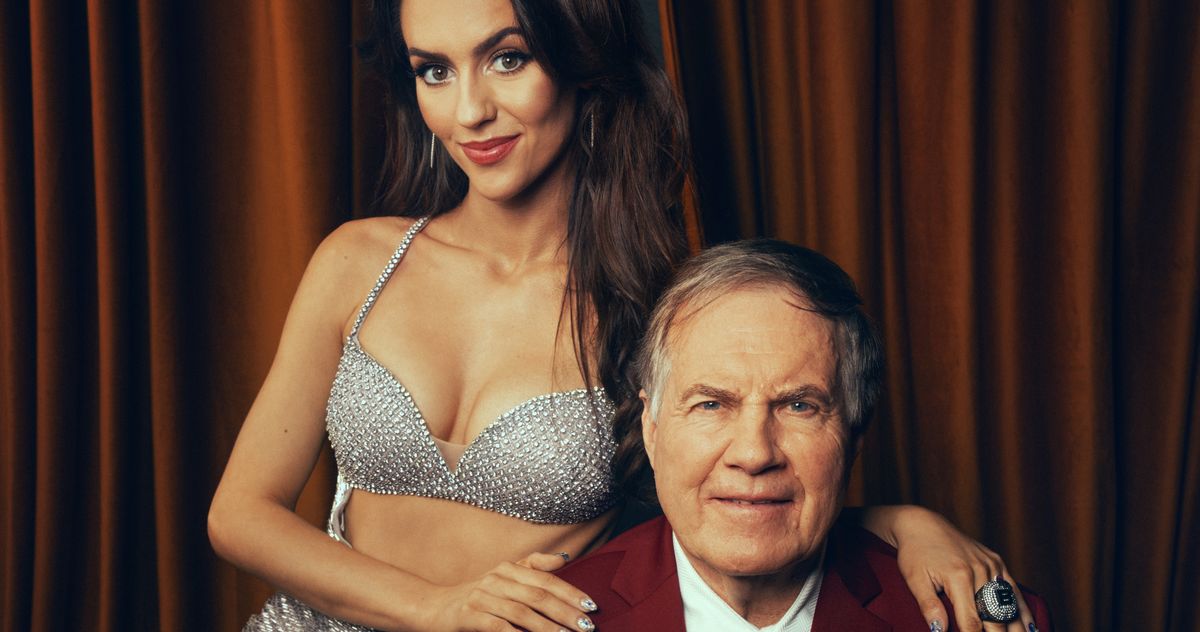

| Photo: Abby Ferguson |

Tools to authenticate images and disclose the use of AI are hot topics right now. Just last week, content delivery giant Cloudflare announced it was joining Adobe's Content Authenticity Initiative (CAI) and offering a one-click setting to preserve Content Credentials. Not long before that, Sony announced it was expanding its Camera Authenticity Solution via a firmware update for three of its cameras. Now, Google is rolling out its solution for photos edited with the Google Photos Magic Editor generative AI feature.

|

|

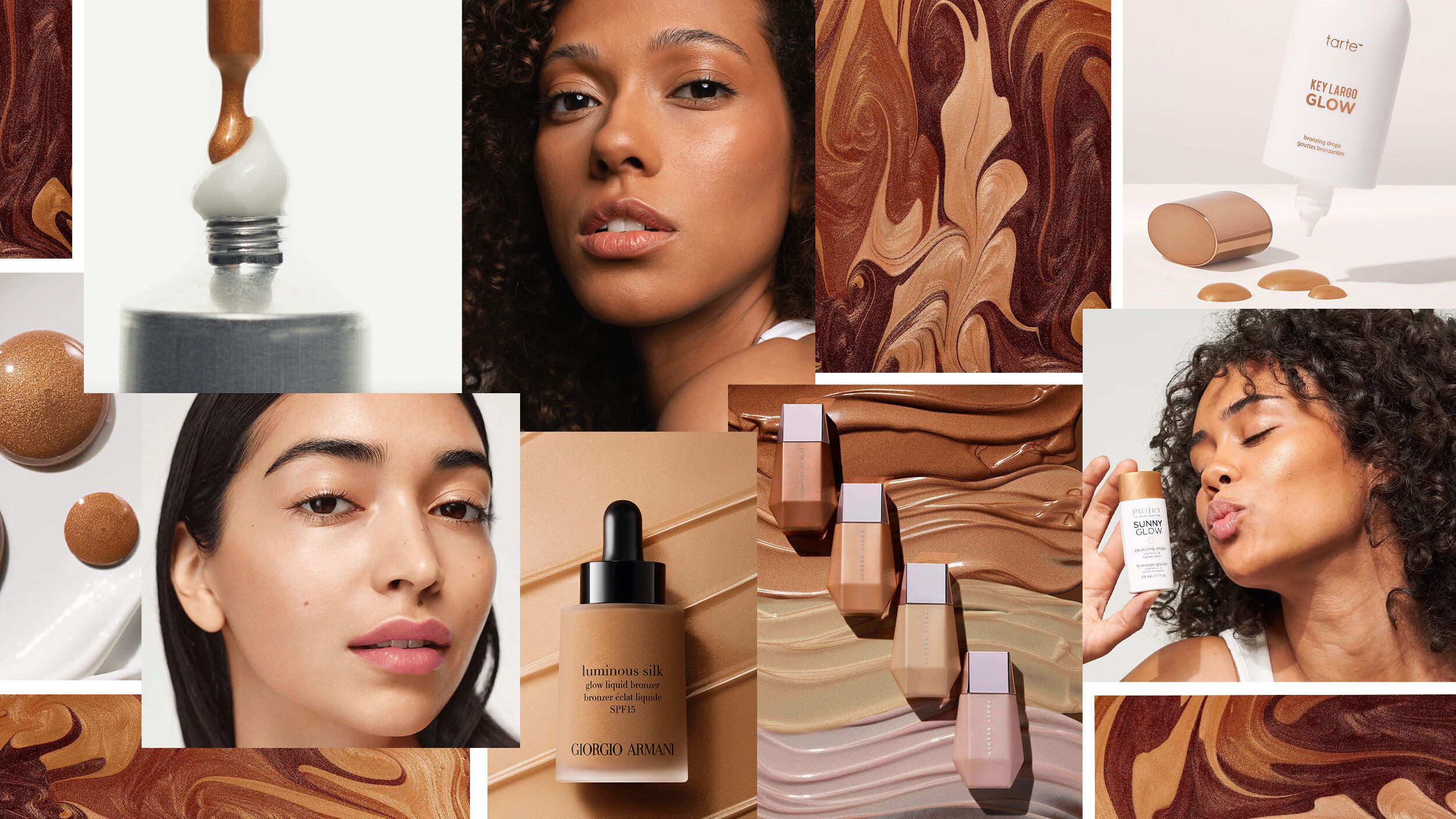

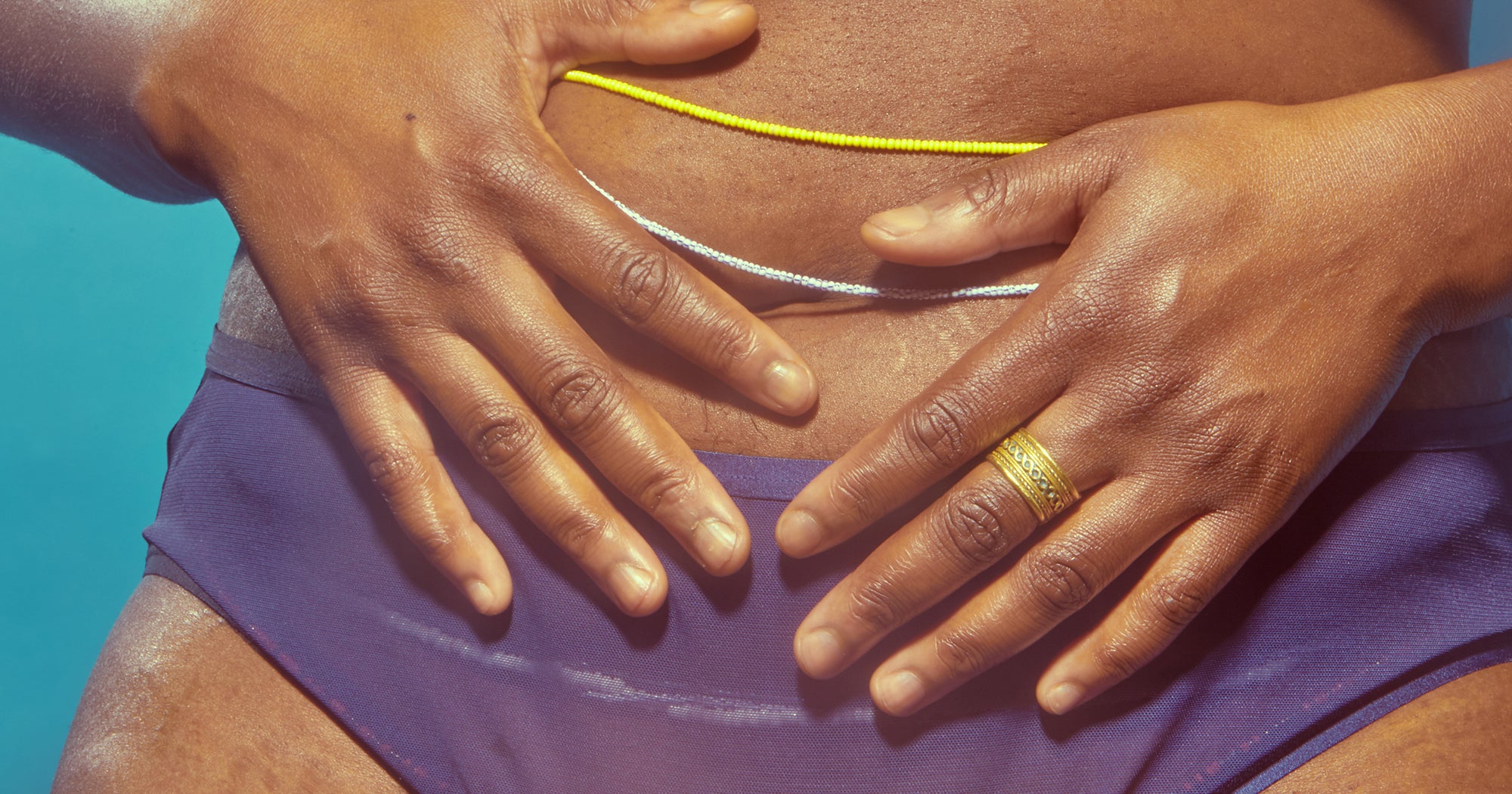

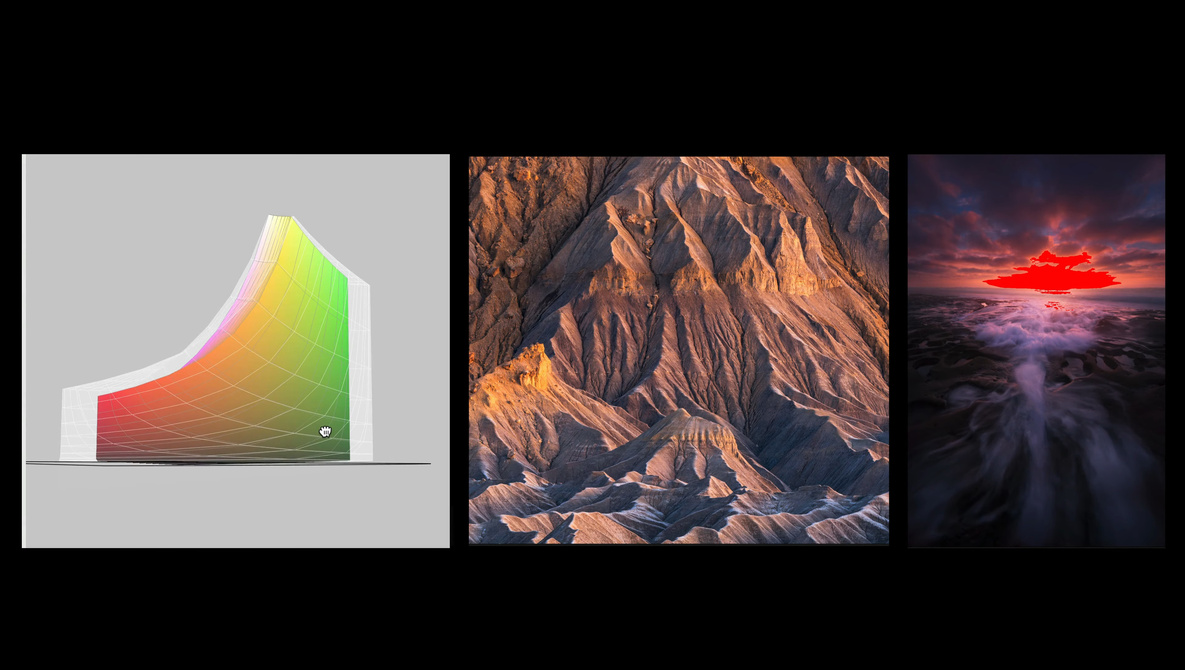

The invisible SynthID watermark will be applied to photos edited using the Google Photos Reimagine tool. Images: Richard Butler |

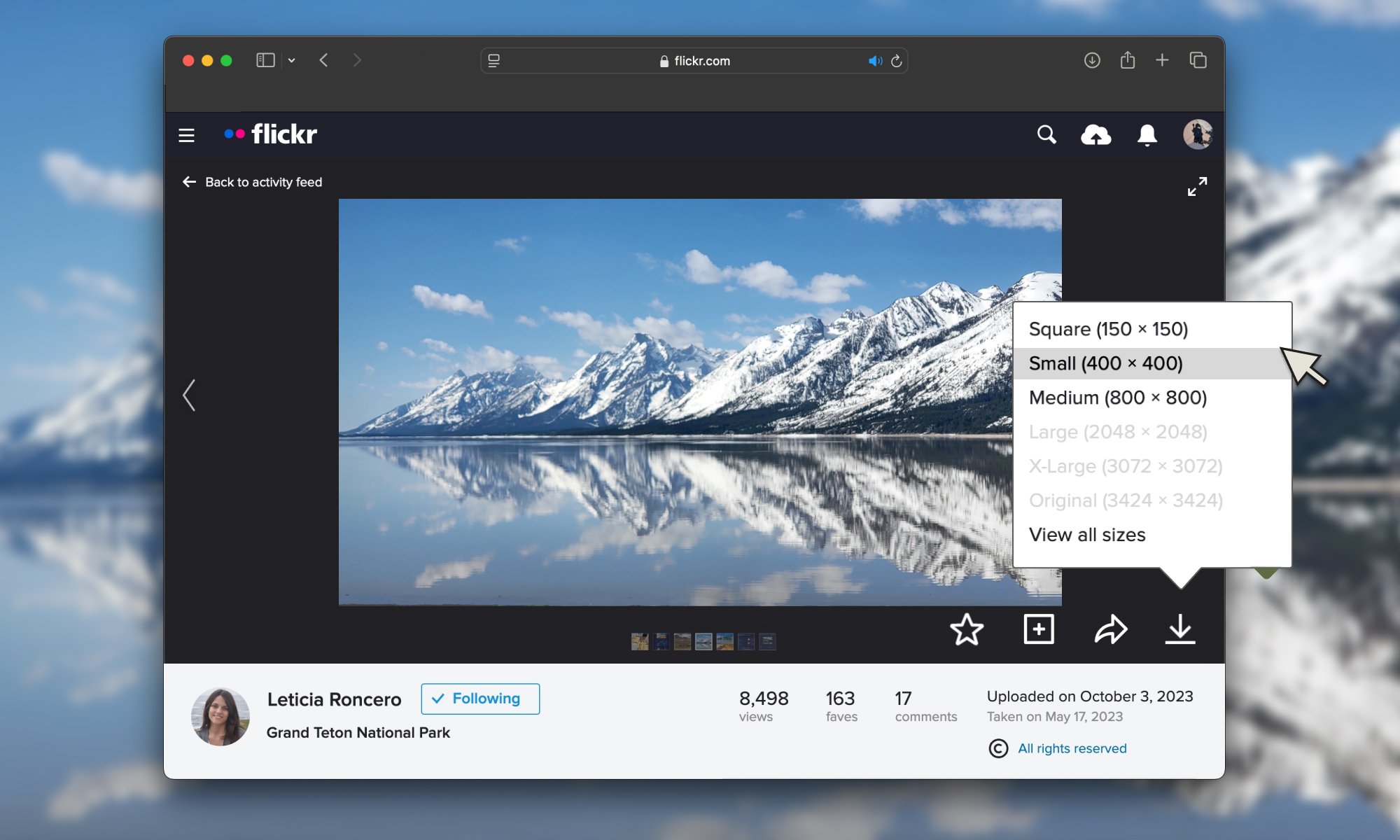

Last week, Google announced that Google Photos will begin implementing a watermarking process for AI-edited images. The new process will rely on SynthID, "a technology that embeds an imperceptible, digital watermark directly into AI-generated images, audio, text or video," Google explained in the announcement. This tool will automatically watermark images that were edited with the Reimagine tool in Magic Editor.

SynthID is a watermarking system developed by Google DeepMind. It embeds an imperceptible digital watermark directly into images, video, audio and text so that they can later be identified as created or edited with AI tools. SynthID is already being used on images created entirely with AI, such as those made with Imagen, Google's text-to-image model. It is also already used on text created by Google's AI models.

While SynthID is potentially a step in the right direction, Google notes that some "edits made using Reimagine may be too small for SynthID to label and detect," such as changing the color of a small flower in the background of an image. The results won't be perfect or all-encompassing, then.

It's also important to note that there won't be any visible watermarks on the images. Instead, if you are curious whether an image was edited with AI, you'll need to use Google's "About this image" tool through Circle to Search or Google Lens. While it's certainly nice that you won't have a giant watermark across your image, an invisible one does little to signal at a glance that something was created or edited with AI. It seems unlikely that most people will take the time to check an image for AI use if doing so takes multiple steps.

Another potential complication is that Google's SynthID watermarking system is separate from what Adobe is doing with CAI. The result is two different systems for flagging AI-created or manipulated images. At this point, there is no clear understanding of how (or if) these different systems will communicate.
